Keras is a high-level deep learning library for building and training neural networks with little boilerplate. Written in Python, it runs on top of TensorFlow and aims to simplify developing deep learning models by providing a user-friendly, intuitive API. As a result, Keras has become a popular choice within the machine learning community.

To understand how Keras works, it's crucial to grasp its architecture and the features it offers. Keras provides a modular, extensible approach to building neural networks, allowing you to create models by assembling predefined building blocks known as layers. This design makes it convenient to experiment quickly and iterate on models, easing the transition from prototyping to deployment.

When working with Keras, you'll start by defining the structure of your neural network, followed by the compilation step, where you specify the model's optimiser, loss function, and evaluation metrics. Once the model is compiled, you'll train it on your data and evaluate its performance. Keras abstracts away many of the complexities of implementing neural networks, letting you focus on essential aspects like model architecture and hyperparameter tuning.
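The define, compile, train, and evaluate workflow can be sketched end to end in a few lines. This is a minimal illustration rather than a recipe: the random data (200 samples, 20 features, 3 classes), the layer sizes, and the three training epochs are arbitrary assumptions.

```python
import numpy as np
import keras

# Synthetic data (assumption for illustration): 200 samples, 20 features, 3 classes
rng = np.random.default_rng(0)
x = rng.normal(size=(200, 20)).astype("float32")
y = rng.integers(0, 3, size=(200,))  # integer class labels

# 1. Define the structure of the network
model = keras.Sequential([
    keras.Input(shape=(20,)),
    keras.layers.Dense(16, activation="relu"),
    keras.layers.Dense(3, activation="softmax"),
])

# 2. Compile: choose the optimiser, loss function and evaluation metrics
model.compile(optimizer="adam",
              loss="sparse_categorical_crossentropy",
              metrics=["accuracy"])

# 3. Train, holding out the last 20% of the samples for validation
history = model.fit(x, y, epochs=3, batch_size=32, validation_split=0.2, verbose=0)

# 4. Evaluate and predict
loss, accuracy = model.evaluate(x, y, verbose=0)
probabilities = model.predict(x[:5], verbose=0)  # one row of class probabilities per sample
```

The labels here are integer class indices, which is why the sketch uses sparse_categorical_crossentropy rather than categorical_crossentropy.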

Fundamentals of Keras

Keras Architecture

Keras is a high-level, user-friendly API for building and training neural networks. It is an open-source library built in Python that runs on top of TensorFlow. Developed by François Chollet, Keras enables fast experimentation and iteration while lowering the barrier to entry for working with deep learning.

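Keras has a modular architecture that allows you to easily define and combine layers, loss functions, optimisers, and metrics. Older multi-backend releases of Keras (2.3 and earlier) supported TensorFlow, Microsoft Cognitive Toolkit (CNTK), and Theano; those backends have since been dropped, and Keras 3 instead runs on TensorFlow, JAX, or PyTorch.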
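In Keras 3, the backend is selected with the KERAS_BACKEND environment variable, which is read once, when keras is first imported. A minimal sketch, assuming TensorFlow is installed (valid values also include "jax" and "torch" if those libraries are available):

```python
import os

# Must be set before the first "import keras" in the process
os.environ["KERAS_BACKEND"] = "tensorflow"

import keras

print(keras.backend.backend())  # prints the name of the active backend
```

Older Keras 2 installations ignore this variable and always report TensorFlow.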

Core Components

There are several core components in Keras that you should be familiar with when building your own deep learning models:

- Layers: The basic building blocks of neural networks in Keras. Each layer performs a specific operation on the input data, such as convolution, pooling, or activation. Keras offers a wide array of built-in layers, like Dense, Conv2D, and LSTM.

- Models: A model in Keras is a collection of layers that are connected in a specific architecture. Models can be trained, evaluated, and used for predictions. Keras offers two primary ways of defining models: the sequential and functional APIs, which we will discuss further in the next section.

- Loss Functions: These functions measure the difference between the predicted output and the actual output (ground truth) during the training process. Common loss functions include mean squared error, categorical cross-entropy, and binary cross-entropy.

- Optimisers: Algorithms that update the model's weights based on the gradients of the loss. Common optimisers include stochastic gradient descent (SGD), RMSprop, and Adam.

- Metrics: Used for measuring the performance of a model during training and evaluation. Examples of metrics include accuracy, precision, and recall.
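A short sketch instantiating one object of each kind and wiring them into a model. The layer sizes, the input width of 8, and the toy arrays are assumptions chosen so the numbers are easy to check by hand: the mean squared error of [0, 1] against [0.5, 0.5] is (0.25 + 0.25) / 2 = 0.25, and both toy predictions fall on the correct side of the 0.5 threshold.

```python
import numpy as np
import keras

# A layer: fully connected, 4 units, ReLU activation
layer = keras.layers.Dense(4, activation="relu")

# A loss function: mean squared error between ground truth and prediction
mse = keras.losses.MeanSquaredError()
loss_value = float(mse(np.array([[0.0, 1.0]], dtype="float32"),
                       np.array([[0.5, 0.5]], dtype="float32")))

# An optimiser: Adam with an explicit learning rate
optimiser = keras.optimizers.Adam(learning_rate=1e-3)

# A metric: binary accuracy with the default 0.5 threshold
accuracy = keras.metrics.BinaryAccuracy()
accuracy.update_state(np.array([[1.0], [0.0]]), np.array([[0.9], [0.2]]))
accuracy_value = float(accuracy.result())

# A model ties the pieces together
model = keras.Sequential([
    keras.Input(shape=(8,)),
    layer,
    keras.layers.Dense(1, activation="sigmoid"),
])
model.compile(optimizer=optimiser,
              loss=keras.losses.BinaryCrossentropy(),
              metrics=[keras.metrics.Precision(), keras.metrics.Recall()])
```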

Sequential vs. Functional API

Keras offers two primary ways of defining neural network models:

1. Sequential API: It allows you to create models by simply stacking layers on top of each other. This approach suits many use cases, particularly when the model is a plain stack of layers with a single input and a single output.

```python
from keras.models import Sequential
from keras.layers import Dense

model = Sequential()
model.add(Dense(32, input_shape=(784,)))
model.add(Dense(10, activation='softmax'))
```

2. Functional API: This API provides the flexibility to create models with more complex architectures, such as multiple inputs and outputs or shared layers.

```python
from keras.layers import Input, Dense, concatenate
from keras.models import Model

# Two inputs, each with its own Dense branch
input1 = Input(shape=(784,))
input2 = Input(shape=(784,))
x1 = Dense(32, activation='relu')(input1)
x2 = Dense(32, activation='relu')(input2)

# Merge the branches and classify
merged = concatenate([x1, x2])
output = Dense(10, activation='softmax')(merged)
model = Model(inputs=[input1, input2], outputs=output)
```
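The example above gives each input its own layer. To illustrate the shared-layer case, the following sketch keeps the same input sizes but applies a single Dense instance (named shared_dense here purely for illustration) to both inputs, so both branches use the same weights:

```python
from keras.layers import Input, Dense, concatenate
from keras.models import Model

input1 = Input(shape=(784,))
input2 = Input(shape=(784,))

# One layer object called on two tensors: its weights are shared
shared_dense = Dense(32, activation="relu")
x1 = shared_dense(input1)
x2 = shared_dense(input2)

merged = concatenate([x1, x2])
output = Dense(10, activation="softmax")(merged)
model = Model(inputs=[input1, input2], outputs=output)

print(model.count_params())  # 25770
```

With sharing, the model has 784 × 32 + 32 = 25,120 parameters in the shared layer plus 64 × 10 + 10 = 650 in the output layer, 25,770 in total; the unshared version has two separate 25,120-parameter layers, for 50,890 in total.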

Determine which API suits your needs best and start building your neural networks with Keras!

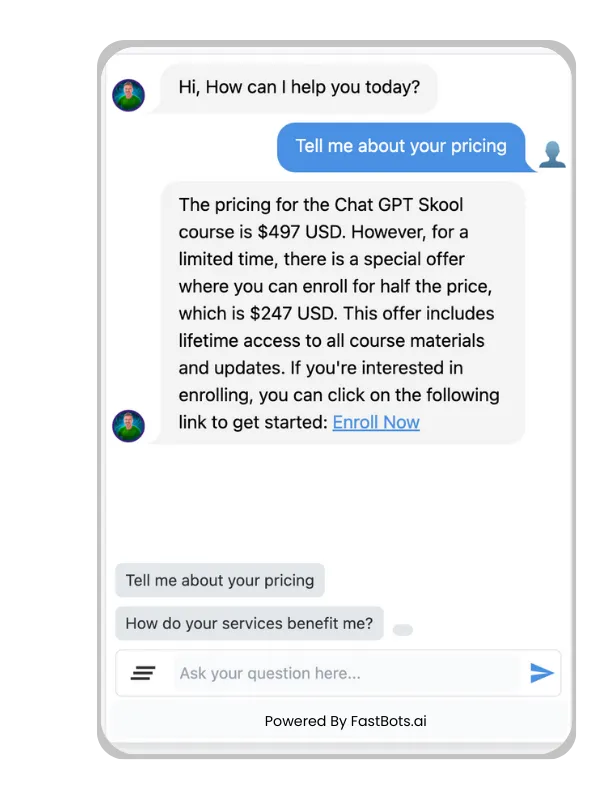


Building a model

In this section, we will discuss how to build a model using Keras. Keras is included with TensorFlow as tf.keras, which is the import path the examples below use.

Defining Layers

First, you need to import the necessary libraries and methods:

```python
import tensorflow as tf
from tensorflow.keras import Sequential
from tensorflow.keras.layers import Dense, Conv2D, MaxPool2D, Flatten
```

To create a neural network model in Keras, you can use the Sequential class, which defines your model as a linear stack of layers. Here's an example of a simple fully connected network with two hidden layers and an output layer:

```python
model = Sequential([
    Dense(128, input_shape=(784,), activation='relu'),
    Dense(64, activation='relu'),
    Dense(10, activation='softmax')
])
```

In the example above, the first Dense layer has 128 units, uses the Rectified Linear Unit (ReLU) activation function, and expects inputs with 784 features (for example, a flattened 28 × 28 image). The second layer has 64 units and also uses ReLU. Finally, the output layer has 10 units with a softmax activation, which turns its outputs into probabilities over 10 classes.
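A quick way to check that an architecture is what you intended is to count its parameters. A Dense layer with n inputs and m units has n × m weights plus m biases, so this model should have (784 × 128 + 128) + (128 × 64 + 64) + (64 × 10 + 10) = 100,480 + 8,256 + 650 = 109,386 parameters. A sketch comparing the hand calculation with count_params(); it declares the input with keras.Input rather than input_shape, which newer Keras versions prefer:

```python
import keras

model = keras.Sequential([
    keras.Input(shape=(784,)),
    keras.layers.Dense(128, activation="relu"),
    keras.layers.Dense(64, activation="relu"),
    keras.layers.Dense(10, activation="softmax"),
])

# Each Dense layer: (inputs x units) weights + one bias per unit
expected = (784 * 128 + 128) + (128 * 64 + 64) + (64 * 10 + 10)
print(expected)              # 109386
print(model.count_params())  # should match the hand calculation
```

model.summary() prints the same count broken down per layer.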

For tasks such as image classification, you might want to use convolutional layers instead of dense layers. Here's an example of how to create a model with convolutional layers:

```python
model = Sequential([
    Conv2D(32, kernel_size=(3, 3), input_shape=(32, 32, 3), activation='relu'),
    MaxPool2D(pool_size=(2, 2)),
    Conv2D(64, kernel_size=(3, 3), activation='relu'),
    MaxPool2D(pool_size=(2, 2)),
    Flatten(),
    Dense(128, activation='relu'),
    Dense(10, activation='softmax')
])
```
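It helps to trace how the tensor shape changes through this network. With the default "valid" padding, each 3 × 3 convolution trims 2 pixels from the height and width, and each 2 × 2 pooling halves them, rounding down: 32 → 30 → 15 → 13 → 6, so Flatten produces 6 × 6 × 64 = 2,304 features. The helper trace_shapes below is hypothetical, not a Keras API; it pushes one zero-filled dummy image through each layer and records the output shape:

```python
import numpy as np
import keras
from keras.layers import Conv2D, MaxPool2D, Flatten, Dense

model = keras.Sequential([
    keras.Input(shape=(32, 32, 3)),
    Conv2D(32, kernel_size=(3, 3), activation="relu"),
    MaxPool2D(pool_size=(2, 2)),
    Conv2D(64, kernel_size=(3, 3), activation="relu"),
    MaxPool2D(pool_size=(2, 2)),
    Flatten(),
    Dense(128, activation="relu"),
    Dense(10, activation="softmax"),
])

def trace_shapes(model, input_shape):
    """Hypothetical helper: run one dummy sample layer by layer, recording output shapes."""
    x = np.zeros((1, *input_shape), dtype="float32")
    shapes = []
    for layer in model.layers:
        x = layer(x)
        shapes.append((layer.name, tuple(x.shape)))
    return shapes

for name, shape in trace_shapes(model, (32, 32, 3)):
    print(name, shape)
```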

Compiling the model

After defining the layers of your model, you need to compile it. Compiling the model means setting the optimiser, loss function, and metrics that Keras will use during training. Here's an example:

```python
model.compile(optimizer='adam', loss='categorical_crossentropy', metrics=['accuracy'])
```

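In this example, we use the Adam optimiser, the categorical cross-entropy loss function, and the accuracy metric to evaluate the model during training. Categorical cross-entropy expects one-hot encoded labels; if your labels are integer class indices, use loss='sparse_categorical_crossentropy' instead.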
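For the same predictions, both losses compute the negative log of the probability assigned to the true class; they differ only in the label encoding they expect. A sketch with made-up probabilities for two samples and three classes, where the true classes are 0 and 1, so both losses should equal -log(0.7) ≈ 0.357 and -log(0.8) ≈ 0.223:

```python
import numpy as np
import keras

# Made-up predicted class probabilities for two samples
y_pred = np.array([[0.7, 0.2, 0.1],
                   [0.1, 0.8, 0.1]], dtype="float32")

# The same labels in two encodings
y_int = np.array([0, 1])                                     # integer class indices
y_onehot = keras.utils.to_categorical(y_int, num_classes=3)  # one-hot rows

cce = np.asarray(keras.losses.categorical_crossentropy(y_onehot, y_pred))
scce = np.asarray(keras.losses.sparse_categorical_crossentropy(y_int, y_pred))

# Manual check: negative log-probability of the true class
manual = -np.log([0.7, 0.8])
print(cce, scce, manual)
```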

Once your model is compiled, you can train it using the fit method, evaluate its performance using the evaluate method, and make predictions using the predict method.

Data Preprocessing

In this section, we will discuss the essential steps for preparing your data before training a Keras model. Data preprocessing is a crucial part of the machine learning pipeline, and Keras provides various tools to make it easier for you. We will focus on two main aspects: data loading and data augmentation.

Data Loading

To build a Keras model, you need to load the data into a suitable format. This typically involves reading from files or databases and converting the raw input into tensors. Keras offers several utilities to streamline this process for common data formats, such as images and text. Here are some best practices to keep in mind:

- Use tf.data.Dataset objects: Keras works seamlessly with TensorFlow's tf.data API, which enables efficient data loading, caching, and shuffling. You can easily convert data from various sources into these objects.

- Employ Keras preprocessing layers: Layers such as Rescaling, Normalization, and TextVectorization handle common preprocessing tasks like rescaling images and tokenizing text. You can include them directly in your model architecture, so the exported model accepts raw input and applies the same preprocessing at inference time.

- Split data into train, validation, and test sets: Use the training set to fit the model, the validation set to tune hyperparameters and detect overfitting, and the held-out test set for a final, unbiased estimate of performance.
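These practices can be combined in a minimal sketch, assuming a small in-memory NumPy dataset (100 random samples of 8 features in the 0 to 255 range; all sizes and the 70/15/15 split are arbitrary). Note reshuffle_each_iteration=False: without it, take() and skip() would draw a different split on every pass and leak samples between the sets.

```python
import numpy as np
import tensorflow as tf
import keras

# Synthetic data (assumption): 100 samples, 8 raw features in the 0-255 range
rng = np.random.default_rng(0)
features = rng.integers(0, 256, size=(100, 8)).astype("float32")
labels = rng.integers(0, 2, size=(100,))

dataset = tf.data.Dataset.from_tensor_slices((features, labels))
# Shuffle once with a fixed seed so the split below is stable across epochs
dataset = dataset.shuffle(100, seed=42, reshuffle_each_iteration=False)

# 70 / 15 / 15 split into train, validation and test sets
train_ds = dataset.take(70)
val_ds = dataset.skip(70).take(15)
test_ds = dataset.skip(85)

# Batch and prefetch so loading overlaps with training
train_ds = train_ds.shuffle(70).batch(16).prefetch(tf.data.AUTOTUNE)
val_ds = val_ds.batch(16).prefetch(tf.data.AUTOTUNE)
test_ds = test_ds.batch(16).prefetch(tf.data.AUTOTUNE)

# A preprocessing layer inside the model rescales raw 0-255 values to 0-1,
# so the saved model can accept unscaled input directly
model = keras.Sequential([
    keras.Input(shape=(8,)),
    keras.layers.Rescaling(1.0 / 255),
    keras.layers.Dense(1, activation="sigmoid"),
])
model.compile(optimizer="adam", loss="binary_crossentropy", metrics=["accuracy"])
model.fit(train_ds, validation_data=val_ds, epochs=2, verbose=0)
```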

For instance, if you are working with a text dataset stored as one sub-directory per class, you can use the keras.utils.text_dataset_from_directory() function to load it into a tf.data.Dataset object (keras.utils.image_dataset_from_directory() does the same for images).
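A sketch of loading text this way, assuming one sub-directory per class containing .txt files. The throwaway directory and the four example reviews are hypothetical, created only so the example runs:

```python
import os
import tempfile
import keras

# Hypothetical data: one sub-folder per class, one .txt file per sample
root = tempfile.mkdtemp()
samples = {"neg": ["bad film", "boring plot"], "pos": ["great film", "loved it"]}
for label, texts in samples.items():
    os.makedirs(os.path.join(root, label))
    for i, text in enumerate(texts):
        with open(os.path.join(root, label, f"{i}.txt"), "w") as f:
            f.write(text)

# Labels are inferred from the sub-folder names, in alphabetical order
train_ds = keras.utils.text_dataset_from_directory(root, batch_size=2, seed=0)
print(train_ds.class_names)  # ['neg', 'pos']

# Each element is a batch of raw strings and a batch of integer labels
for texts, labels in train_ds.take(1):
    print(texts.shape, labels.shape)
```

The validation_split and subset arguments of the same function can carve a validation set out of the directory.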

Data Augmentation

Data augmentation is a technique that artificially expands your dataset by creating new samples through random transformations of the original data. This helps the model generalise and reduces overfitting. Keras provides tools that help with augmenting different types of data, such as images and text:

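- Use preprocessing layers for images: Keras offers random image transformation layers such as RandomFlip, RandomRotation, and RandomZoom, which apply new random transformations each time an image passes through them during training. The older ImageDataGenerator class offers similar transformations (rotation, scaling, flipping) but is deprecated and not recommended for new code. Rather than enlarging the dataset on disk, these tools generate varied copies of your images on the fly, increasing the diversity the model sees.

- Leverage TextVectorization for text data: The TextVectorization layer in Keras performs text standardisation (by default, lowercasing and punctuation stripping), tokenization, and mapping of tokens to integer indices; it does not perform stemming or augmentation itself. By combining it with custom Python functions that alter the raw text, you can create augmented text data that challenges your model to learn more robust text representations.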
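A sketch of both ideas. The image part uses Keras's random preprocessing layers, which only transform their input when called with training=True. The text part shows what TextVectorization actually does, and random_word_dropout is a hypothetical augmentation helper, not a Keras API. Image sizes, transformation factors, and sentences are arbitrary assumptions.

```python
import random
import numpy as np
import keras

# Image augmentation with random preprocessing layers
augment = keras.Sequential([
    keras.layers.RandomFlip("horizontal"),
    keras.layers.RandomRotation(0.1),  # up to +/-10% of a full turn (+/-36 degrees)
    keras.layers.RandomZoom(0.1),
])
images = np.random.default_rng(0).random((4, 32, 32, 3)).astype("float32")
augmented = augment(images, training=True)  # random transforms apply only in training mode

# TextVectorization: standardise, split on whitespace, map tokens to integer ids
vectorize = keras.layers.TextVectorization(output_mode="int", output_sequence_length=4)
vectorize.adapt(np.array(["The cat sat.", "The dog ran!"]))
vocab = vectorize.get_vocabulary()    # index 0 is padding, index 1 is [UNK]
ids = vectorize(np.array(["the CAT"]))  # padded with zeros to length 4

def random_word_dropout(text, rate=0.2, seed=None):
    """Hypothetical text augmentation: randomly drop words, keeping at least one."""
    rnd = random.Random(seed)
    words = text.split()
    if not words:
        return text
    kept = [w for w in words if rnd.random() >= rate]
    return " ".join(kept) if kept else rnd.choice(words)

print(random_word_dropout("the film was surprisingly good", rate=0.3, seed=1))
```

The dropout helper would be applied to raw strings before they reach TextVectorization, so the vocabulary itself is unchanged.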

To summarise, proper data preprocessing is vital for building effective Keras models. Use the built-in tools and layers provided by Keras to streamline the loading and augmentation of your data, helping your models generalise well to new data.

Training and evaluation

In this section, we'll discuss how to train and evaluate your Keras models using built-in methods. Keras offers an easy-to-use interface for training and evaluation, making it simple for you to build and fine-tune your deep learning models.

Model Fitting

To train a Keras model, you'll use the fit() method. It takes your input data and labels, plus optional parameters such as the batch size, the number of epochs, and validation data. Here's a brief overview of the key parameters:

- Input data: Your training dataset, which consists of input features and corresponding target labels.

- Batch size: The number of samples to process before updating the model's weights (32 by default). A smaller batch size gives more frequent but noisier weight updates, while a larger batch size makes better use of hardware parallelism and can speed up each epoch.

- Number of epochs: The number of times the model goes through the entire dataset. Increasing the number of epochs can improve model performance, but it may also lead to overfitting.
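Batch size directly sets how many weight updates happen per epoch: when you pass NumPy arrays to fit(), N samples with batch size B give ⌈N / B⌉ updates per epoch, the last batch holding whatever is left over. For example, 1,000 samples with a batch size of 32 give 31 full batches plus one batch of 8 samples, so 32 updates per epoch and 320 over 10 epochs. A small helper (training_steps is hypothetical, for illustration only) makes the arithmetic explicit:

```python
import math

def training_steps(num_samples, batch_size, epochs):
    """Hypothetical helper: weight updates per epoch and in total (last batch may be partial)."""
    steps_per_epoch = math.ceil(num_samples / batch_size)
    return steps_per_epoch, steps_per_epoch * epochs

print(training_steps(1000, 32, 10))   # (32, 320)
print(training_steps(1000, 128, 10))  # (8, 80)
```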

Here's an example of how to fit a model:

```python
model.fit(x_train, y_train, batch_size=32, epochs=10, validation_data=(x_val, y_val))
```

Model Evaluation

Once your model has been trained, you'll want to evaluate its performance on unseen data. Keras provides the evaluate() method, which computes the loss and any metrics you specified in compile(). To evaluate a model, you'll need a separate dataset, typically called a test set: examples the model has not seen during training, which let you gauge its ability to generalise.

Here's an example of how to evaluate a model:

```python
score = model.evaluate(x_test, y_test, batch_size=32)
```

The evaluate() method returns the loss followed by the value of each metric specified in compile() (or a single scalar loss if the model has one output and no metrics). To make predictions on new data, use the predict() method:

```python
predictions = model.predict(x_new)
```
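Two details make these results easier to work with: passing return_dict=True to evaluate() returns a dictionary keyed by metric name instead of a bare list, and for a softmax classifier predict() returns class probabilities, which np.argmax turns into predicted labels. A sketch on random data with an untrained toy model (both assumptions for illustration):

```python
import numpy as np
import keras

# Synthetic test data (assumption): 50 samples, 4 features, 3 classes
rng = np.random.default_rng(0)
x_test = rng.normal(size=(50, 4)).astype("float32")
y_test = rng.integers(0, 3, size=(50,))

model = keras.Sequential([keras.Input(shape=(4,)), keras.layers.Dense(3, activation="softmax")])
model.compile(optimizer="adam", loss="sparse_categorical_crossentropy", metrics=["accuracy"])

# Named results instead of a positional list
results = model.evaluate(x_test, y_test, verbose=0, return_dict=True)
print(results)

# Probabilities per class, then the most likely class per sample
probabilities = model.predict(x_test[:5], verbose=0)
predicted_classes = np.argmax(probabilities, axis=-1)
```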

Remember that fit(), evaluate(), and predict() work the same way whether your model is a Sequential model, a Functional API model, or a model written from scratch via subclassing. By understanding these built-in tools for training and evaluation, you'll be able to create and fine-tune your deep learning models with ease.

Advanced Features

Custom Callbacks

Keras allows you to create custom callbacks that perform specific actions at different stages of the training process. A custom callback is a Python class that inherits from the keras.callbacks.Callback base class. With a custom callback, you can monitor model performance, stop training early, or even modify the model's weights.

To create a custom callback, override one or more of its hook methods, such as on_epoch_begin, on_epoch_end, on_batch_begin, and on_batch_end. Keras calls these methods at the corresponding stages of training. Here's an example of a simple custom callback that prints the training loss at the end of each epoch:

```python
from keras.callbacks import Callback

class PrintLossCallback(Callback):
    def on_epoch_end(self, epoch, logs=None):
        print(f"Epoch {epoch}: Loss {logs['loss']:.4f}")
```
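Custom callbacks can also control training. The hypothetical StopAtLossThreshold callback below stops training once an epoch's training loss falls below a chosen threshold by setting self.model.stop_training = True from on_epoch_end, which ends the run after that epoch. The toy regression data and the threshold value are assumptions. For the common case of stopping when a validation metric stops improving, Keras already provides keras.callbacks.EarlyStopping.

```python
import numpy as np
import keras

class StopAtLossThreshold(keras.callbacks.Callback):
    """Hypothetical callback: stop once the epoch's training loss drops below a threshold."""

    def __init__(self, threshold):
        super().__init__()
        self.threshold = threshold

    def on_epoch_end(self, epoch, logs=None):
        loss = (logs or {}).get("loss")
        if loss is not None and loss < self.threshold:
            print(f"Epoch {epoch}: loss {loss:.4f} below {self.threshold}, stopping")
            self.model.stop_training = True

# Toy regression data (assumption for illustration)
rng = np.random.default_rng(0)
x = rng.normal(size=(64, 4)).astype("float32")
y = rng.normal(size=(64, 1)).astype("float32")

model = keras.Sequential([keras.Input(shape=(4,)), keras.layers.Dense(1)])
model.compile(optimizer="sgd", loss="mse")
history = model.fit(x, y, epochs=20, verbose=0, callbacks=[StopAtLossThreshold(threshold=10.0)])
print(len(history.history["loss"]), "epochs run")
```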

TensorBoard Integration

Keras provides seamless TensorBoard integration to help you visualise your models, track their performance, and debug issues during training. TensorBoard is a powerful visualisation tool that comes with TensorFlow, and it can be easily integrated with your Keras models.

To use TensorBoard with Keras, import the TensorBoard callback from keras.callbacks and pass it to your model's fit() method through the callbacks argument:

```python
from keras.callbacks import TensorBoard

# Pass the log directory to the TensorBoard callback
tensorboard_callback = TensorBoard(log_dir='./logs')

# Add the callback to the fit() method
model.fit(x_train, y_train, epochs=10, callbacks=[tensorboard_callback])
```

This will save all the necessary data for TensorBoard in the specified log_dir. To view the visualisations, launch TensorBoard from the command line specifying the log directory:

```shell
tensorboard --logdir ./logs
```

This will start a TensorBoard server, and you can view the visualisations by navigating to the URL displayed in your terminal.
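A common convention (not a requirement) is to give each training run its own timestamped sub-directory, so TensorBoard can display several runs side by side. The sketch below also sets histogram_freq=1 to log weight histograms every epoch; the toy data, model, and the logs/fit directory layout are assumptions:

```python
import datetime
import os
import numpy as np
import keras

# One sub-directory per run, named after the current time
log_dir = os.path.join("logs", "fit", datetime.datetime.now().strftime("%Y%m%d-%H%M%S"))

tensorboard_callback = keras.callbacks.TensorBoard(
    log_dir=log_dir,
    histogram_freq=1,  # write weight histograms every epoch
)

# Toy regression data and model (assumptions for illustration)
rng = np.random.default_rng(0)
x = rng.normal(size=(64, 4)).astype("float32")
y = rng.normal(size=(64, 1)).astype("float32")

model = keras.Sequential([keras.Input(shape=(4,)), keras.layers.Dense(1)])
model.compile(optimizer="adam", loss="mse")
model.fit(x, y, epochs=2, validation_split=0.25, verbose=0, callbacks=[tensorboard_callback])
```

You would then point TensorBoard at the parent directory, for example with tensorboard --logdir logs/fit, to compare runs.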

In summary, advanced features such as custom callbacks and TensorBoard integration enable you to gain deeper insights into your models' performance and make your experimentation process more efficient. Keras' rich functionality empowers you to build and refine your models quickly and effectively.

Frequently Asked Questions

What are the fundamental steps involved in using Keras for deep learning?

1. Prepare the data: Preprocess your data by cleaning, normalising, and encoding it to make it suitable for training a neural network.

2. Define the model: Design the architecture of your neural network using Keras' high-level API, specifying layers, activation functions, and network shape.

3. Compile the model: Choose an optimiser, loss function, and evaluation metrics that best suit your deep learning project.

4. Train the model: Feed the training data to the model and adjust its weights over a specified number of passes through the data (epochs) to minimise the loss.

5. Evaluate and improve: Assess your model's performance using validation data and fine-tune its hyperparameters or architecture as needed.

6. Deploy: Export the trained model for use in production environments or further experimentation.

Can you explain the role of Keras in Python-based deep learning projects?

What are the primary applications Keras is used for in machine learning?

- Image and video recognition

- Natural language processing

- Sentiment analysis and text classification

- Time series forecasting

- Anomaly detection

- Recommender systems

Its versatile and user-friendly nature makes Keras a popular choice for both beginners and experts in machine learning.

How does Keras interface with TensorFlow to build and train models?

What are the key differences between Keras and PyTorch as deep learning frameworks?

Keras:

- High-level, user-friendly API

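- Uses TensorFlow as its default backend (Keras 3 can also run on JAX or PyTorch)

- Compiles training steps into graphs for performance by default, with eager execution available for debugging

- Focuses on simplicity and ease of use

- Often preferred for rapid prototyping, teaching, and smaller projects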

PyTorch:

- Dynamic computation graph (define-by-run): the graph is built as ordinary Python code executes

- Provides greater flexibility in building complex models

- Widely used in research due to its dynamic nature and detailed customisation options

- Explicit, fine-grained control over device placement and GPU usage

- Easier debugging with standard Python tools, since operations run eagerly

The choice between Keras and PyTorch depends on your project requirements, expertise, and personal preference.

Could you outline the basic principles underlying the Keras architecture?

1. Layers: The building blocks of neural networks, implemented as Python classes. Keras offers a large variety of pre-built layers that can be combined to create bespoke architectures.

2. Models: Represent the neural network itself. Keras provides two main model types - the Sequential model for linearly stacked layers, and the Model class for more complex, multi-input or multi-output architectures.

3. Optimisers: Algorithms that adjust the model's weights to minimise the loss function. Keras includes a wide selection of optimisers, such as stochastic gradient descent (SGD), Adam, and RMSprop.

4. Loss functions: Measure the difference between the predicted output and the actual target values. Keras supports various loss functions, like mean squared error and categorical cross-entropy, to suit diverse problem types.
