In today's rapidly evolving business landscape, it's essential to leverage artificial intelligence (AI) to stay ahead of the competition. One powerful tool at your disposal is TensorFlow, an open-source library and versatile framework for developing, training, and deploying machine learning models. This guide will help you understand how to effectively use TensorFlow for various business purposes.

To begin with, TensorFlow's core is written in C++, and it offers APIs for languages such as Python, C++, Java, and JavaScript, making it fast and compatible with various platforms. TensorFlow Lite even allows models to run in mobile applications and on embedded systems. By incorporating TensorFlow into your business strategy, you can gain insights and make data-driven decisions to improve efficiency, performance, and the customer experience.

As you explore TensorFlow, you'll come across essential components such as tensors, which are multi-dimensional arrays. Mastering these foundational concepts will enable you to harness the framework's full potential and apply it to various business scenarios, from automating and tracking model training to developing AI-powered solutions that directly benefit your customers and operations.

Understanding TensorFlow and Its Business Applications

Essentials of TensorFlow

TensorFlow is a powerful, open-source software library developed by Google for building and training machine learning and deep learning models. It provides a flexible, efficient, and scalable platform that enables you to create neural networks and deploy them across different environments, such as servers, edge devices, browsers, and mobile devices.

Some essential features of TensorFlow include:

- Tensors: Multi-dimensional arrays that store data and are the basic building blocks of TensorFlow computations.

- Keras API: A high-level interface for designing and training machine learning models, making it easier for beginners and researchers.

- GPU support: accelerate training and inference using CUDA-enabled graphics cards.

- Deployment options: versatile deployment capabilities on various platforms, including on-premise, cloud, and edge devices.
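To make the idea of tensors concrete, here is a minimal sketch, assuming TensorFlow 2 is installed. The spend figures are hypothetical and only illustrate how a table of business data maps onto a rank-2 tensor.

```python
import tensorflow as tf

# Tensors of increasing rank: a scalar, a vector, and a matrix.
scalar = tf.constant(3.0)
vector = tf.constant([1.0, 2.0, 3.0])
matrix = tf.constant([[1.0, 2.0], [3.0, 4.0]])

# Hypothetical business data: 2 customers x 3 monthly spend figures.
monthly_spend = tf.constant([[120.0, 80.0, 100.0], [40.0, 60.0, 50.0]])

# Average spend per customer (reduce across the month axis).
average_spend = tf.reduce_mean(monthly_spend, axis=1)

print("matrix shape:", tuple(matrix.shape), "dtype:", matrix.dtype.name)
print("average spend per customer:", average_spend.numpy())
```

Every tensor carries a shape and a data type, which is what lets TensorFlow run the same operation on a CPU, GPU, or TPU without code changes.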

Business Use Cases

TensorFlow offers numerous applications that can benefit businesses in various industries.

Some notable use cases are:

1. Image recognition: Implementing advanced image recognition systems for quality control, security, or product identification.

2. Natural language processing (NLP): analysing and understanding customer feedback, sentiment analysis, or chatbot development.

3. Recommendation systems: creating personalised recommendations to improve the user experience, increase customer retention, and boost sales.

4. Predictive analytics: forecasting trends and customer behaviour to optimise business operations and reduce costs.

5. Fraud detection: monitoring and analysing transactions in real-time to identify potential fraud and enhance security.

Strategic Advantages

By integrating TensorFlow into your business operations, you can gain several competitive advantages:

- Scalability: TensorFlow allows for the development of complex models that can handle large volumes of data, ensuring your business can scale as it grows.

- Optimisation: The platform offers various tools and techniques to fine-tune your model, leading to improved accuracy and better results.

- Flexible deployment: Deploy your machine learning models wherever needed, whether it's on a server, edge device, browser, or mobile platform, to meet business requirements.

- Customisation: Build tailored solutions to address specific challenges and problems unique to your business, thereby gaining a competitive edge.

Incorporating TensorFlow into your business operations can lead to more informed decision-making, streamlined processes, and the ability to stay ahead in a rapidly evolving market.

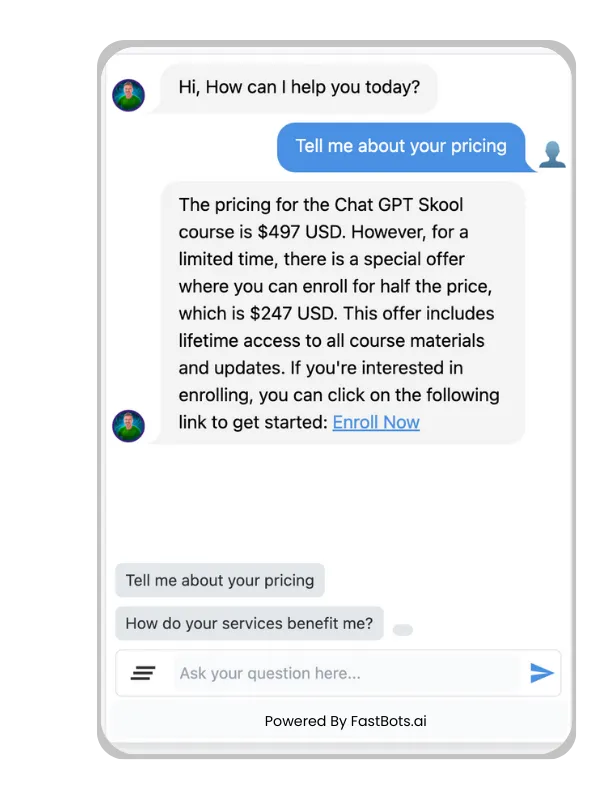


Setting Up Your TensorFlow Environment

Installation and Configuration

To get started with TensorFlow, follow these steps:

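1. Install the package: You can either install the TensorFlow package with pip or build it from source. In recent TensorFlow 2 releases the standard tensorflow pip package serves both CPU-only and GPU machines. GPU acceleration additionally requires a supported NVIDIA driver and CUDA libraries, so check the official install guide for the versions that match your release.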

2. Migrate to TensorFlow 2: If you've used TensorFlow 1.x in the past, learn how to migrate your code to TensorFlow 2. The new version has several improvements, making it simpler and more efficient.

3. Explore Keras: Keras is a high-level API included in TensorFlow. It's perfect for ML beginners and researchers alike, providing a user-friendly interface for building neural networks.

4. Master TensorFlow basics: Familiarise yourself with the fundamental concepts and classes that make TensorFlow work.

5. Learn about data-input pipelines: Understand how to create efficient pipelines to feed data into your models. TensorFlow's tf.data API covers loading, shuffling, batching, and prefetching data for machine learning.
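As a quick check after installation, the sketch below prints the installed version and builds a tiny input pipeline with tf.data. It assumes TensorFlow 2.4 or later, and the feature and label values are made up for illustration.

```python
import tensorflow as tf

# Confirm the installation by printing the version.
print("TensorFlow version:", tf.__version__)

# Hypothetical toy data: 6 samples with 2 features each, plus binary labels.
features = [[0.1, 0.2], [0.3, 0.4], [0.5, 0.6], [0.7, 0.8], [0.9, 1.0], [1.1, 1.2]]
labels = [0, 0, 1, 1, 0, 1]

# Shuffle, batch, and prefetch so data loading overlaps with training.
dataset = (
    tf.data.Dataset.from_tensor_slices((features, labels))
    .shuffle(buffer_size=6, seed=42)
    .batch(2)
    .prefetch(tf.data.AUTOTUNE)
)

batch_shapes = [tuple(x.shape) for x, _ in dataset]
print("feature batch shapes:", batch_shapes)
```

A dataset built this way can be passed directly to a Keras model's fit method in place of in-memory arrays.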

Choosing the Right Hardware

Selecting the appropriate hardware is essential to maximising TensorFlow's performance. Consider the following options:

- CPU: TensorFlow can run on a wide range of CPUs, from basic to high-end processors. However, for complex deep learning tasks, CPUs may be slower than GPUs.

- GPU: GPUs are highly parallelised, making them ideal for training large neural networks. TensorFlow supports CUDA-enabled NVIDIA cards, which significantly boost processing power for deep learning tasks.

- TPU: Tensor Processing Units (TPUs) are AI accelerators designed by Google specifically for machine learning workloads. They can train large models faster than GPUs in many cases and integrate closely with TensorFlow. You can access TPUs through Google Cloud.

Remember, choosing the right hardware for your TensorFlow projects depends on factors such as budget, model complexity, and processing power requirements. Take the time to research and compare different options before making a decision.
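Before committing to hardware, it can help to see what TensorFlow detects on a given machine. The following is a small sketch, assuming TensorFlow 2 is installed. It falls back to the CPU when no GPU is visible, and it does not cover TPU setup.

```python
import tensorflow as tf

# List the devices TensorFlow can see on this machine.
cpus = tf.config.list_physical_devices("CPU")
gpus = tf.config.list_physical_devices("GPU")
print(f"CPUs visible: {len(cpus)}, GPUs visible: {len(gpus)}")

# Run a small matrix multiplication on the GPU if present, else on the CPU.
device_name = "/GPU:0" if gpus else "/CPU:0"
with tf.device(device_name):
    product = tf.matmul(tf.ones((2, 3)), tf.ones((3, 2)))

print("computed on", device_name)
print(product.numpy())
```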

Developing TensorFlow Models for Business

Data Preparation

Before diving into TensorFlow model development, it's crucial to prepare your data. This ensures higher-quality models and better performance. Firstly, collect all the relevant data from your business domain. Remember that data quality is key, so clean and preprocess the data, removing any irrelevant features or outliers.

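Secondly, split the data into training, validation, and testing sets. A typical ratio is 70% for training, 15% for validation, and 15% for testing. Then perform feature scaling on your input data, using techniques like normalisation and standardisation, so that features with large numeric ranges do not dominate training and the optimiser converges more reliably. Compute the scaling statistics on the training set only and reuse them for the validation and testing sets.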
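The split and scaling steps can be sketched with the Python standard library alone. The helper names (split_dataset, standardise) and the order values are hypothetical. In a real project you would more likely use NumPy, pandas, or a Keras preprocessing layer.

```python
import random
import statistics


def split_dataset(rows, train_frac=0.70, val_frac=0.15, seed=0):
    """Shuffle rows and split them into train, validation, and test lists."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    n_train = round(len(shuffled) * train_frac)
    n_val = round(len(shuffled) * val_frac)
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]
    return train, val, test


def standardise(values, mean, stdev):
    """Rescale values to zero mean and unit variance using the given statistics."""
    return [(v - mean) / stdev for v in values]


# Hypothetical feature: 100 order values.
order_values = [float(v) for v in range(1, 101)]
train, val, test = split_dataset(order_values)

# Statistics come from the training split only, to avoid leaking
# information from the validation and test data into training.
train_mean = statistics.mean(train)
train_stdev = statistics.pstdev(train)

train_scaled = standardise(train, train_mean, train_stdev)
val_scaled = standardise(val, train_mean, train_stdev)
test_scaled = standardise(test, train_mean, train_stdev)

print(len(train), len(val), len(test))
```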

Model Training

Once your data is prepared, it's time to train your TensorFlow model. There are a variety of TensorFlow APIs available to accomplish this task. Some popular choices include:

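- Keras: A high-level API for developing deep learning models.

- Estimators: A high-level API for creating complete ML models. Estimators are a legacy API from TensorFlow 1.x, so Keras is generally the better choice for new projects.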

- Custom Models: For advanced users, you can build models from scratch using TensorFlow's low-level APIs.

Choose the API that best suits your business needs and expertise. After defining the architecture of your model, compile it with an optimiser, loss function, and metrics to gauge performance. Finally, fit the model to your training data with the desired number of epochs and batch size.
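The define, compile, and fit sequence described above might look like this with Keras. This is a minimal sketch on synthetic data. The layer sizes, the Adam optimiser, and the epoch and batch settings are illustrative assumptions, not recommendations.

```python
import numpy as np
import tensorflow as tf

# Hypothetical synthetic data: 200 samples, 4 features, binary label.
rng = np.random.default_rng(0)
x_train = rng.normal(size=(200, 4)).astype("float32")
y_train = (x_train.sum(axis=1) > 0).astype("float32")

# 1. Define the architecture.
model = tf.keras.Sequential([
    tf.keras.Input(shape=(4,)),
    tf.keras.layers.Dense(8, activation="relu"),
    tf.keras.layers.Dense(1, activation="sigmoid"),
])

# 2. Compile with an optimiser, a loss function, and metrics.
model.compile(optimizer="adam", loss="binary_crossentropy", metrics=["accuracy"])

# 3. Fit to the training data with a chosen number of epochs and batch size.
history = model.fit(x_train, y_train, epochs=5, batch_size=32, verbose=0)

print("tracked metrics:", sorted(history.history.keys()))
print("final training loss:", history.history["loss"][-1])
```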

Model Evaluation

The last step in TensorFlow model development is model evaluation. This helps you assess how well the model performs and how well it generalises to new data. Use the validation data for hyperparameter tuning and early stopping, ensuring that the model does not overfit.
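Early stopping on validation data can be sketched with the Keras EarlyStopping callback. The data is synthetic, and the patience value and the 240/60 split are assumptions chosen only to keep the example small.

```python
import numpy as np
import tensorflow as tf

# Hypothetical synthetic data, split into training and validation parts.
rng = np.random.default_rng(1)
x = rng.normal(size=(300, 4)).astype("float32")
y = (x[:, 0] > 0).astype("float32")
x_train, y_train = x[:240], y[:240]
x_val, y_val = x[240:], y[240:]

model = tf.keras.Sequential([
    tf.keras.Input(shape=(4,)),
    tf.keras.layers.Dense(8, activation="relu"),
    tf.keras.layers.Dense(1, activation="sigmoid"),
])
model.compile(optimizer="adam", loss="binary_crossentropy", metrics=["accuracy"])

# Stop when validation loss has not improved for 3 epochs,
# and restore the weights from the best epoch seen.
early_stopping = tf.keras.callbacks.EarlyStopping(
    monitor="val_loss", patience=3, restore_best_weights=True
)

history = model.fit(
    x_train, y_train,
    validation_data=(x_val, y_val),
    epochs=50, batch_size=32,
    callbacks=[early_stopping], verbose=0,
)

epochs_run = len(history.history["val_loss"])
print("epochs run:", epochs_run)
```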

Once satisfied with the performance on the validation data, evaluate your model on the testing data. This provides an unbiased estimate of how well the model will perform with real-world data. Common evaluation metrics include:

- Classification: accuracy, precision, recall, F1-score

- Regression: Mean Absolute Error (MAE), Mean Squared Error (MSE), R-squared
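To show what these metrics actually compute, here is a library-free sketch. The function names and the sample labels are hypothetical. In practice Keras metrics or scikit-learn would provide the same quantities.

```python
def classification_metrics(y_true, y_pred):
    """Accuracy, precision, recall, and F1 for binary labels (0 or 1)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    accuracy = sum(1 for t, p in pairs if t == p) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


def regression_metrics(y_true, y_pred):
    """MAE, MSE, and R-squared for numeric predictions."""
    n = len(y_true)
    errors = [t - p for t, p in zip(y_true, y_pred)]
    mae = sum(abs(e) for e in errors) / n
    mse = sum(e * e for e in errors) / n
    mean_true = sum(y_true) / n
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    r2 = 1 - (mse * n) / ss_tot if ss_tot else 0.0
    return {"mae": mae, "mse": mse, "r2": r2}


# Hypothetical test-set labels and predictions.
cls = classification_metrics([1, 0, 1, 1, 0, 1], [1, 0, 0, 1, 1, 1])
reg = regression_metrics([10.0, 20.0, 30.0], [12.0, 18.0, 33.0])
print(cls)
print(reg)
```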

The TensorFlow model is now ready for deployment in your business environment. Remember to monitor its performance regularly and adjust or retrain it when results start to slip.

Deploying TensorFlow Solutions in Production

In this section, we will discuss how to deploy your TensorFlow models in a production environment. Businesses can leverage the power of machine learning (ML) to solve complex problems and improve decision-making.

Deployment Strategies

When it comes to deploying TensorFlow models in production, there are several strategies that you can choose from:

1. TensorFlow Serving: This is a flexible, high-performance serving system for ML models, designed for production environments. TensorFlow Serving allows for easy integration with your existing infrastructure, providing APIs for both gRPC and REST.

2. TFX (TensorFlow Extended): An end-to-end platform for deploying production ML pipelines. It consists of a set of components and libraries that coordinate the process from data ingestion to model deployment. With TFX, you have access to best practices for deploying ML solutions at scale.

3. Cloud-based solutions: Cloud platforms such as Google Cloud Vertex AI (the successor to AI Platform) or Amazon SageMaker provide managed services to deploy, monitor, and scale TensorFlow models with minimal effort. These solutions take care of the infrastructure management, allowing you to focus on the core ML aspects.
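As an illustration of the REST option, the sketch below builds a prediction request in the format TensorFlow Serving's REST API accepts. The host, the model name churn_model, and the feature values are hypothetical. Port 8501 is the commonly used REST port and may differ in your setup. The request is only constructed here, not sent.

```python
import json
import urllib.request


def build_predict_request(host, model_name, instances, port=8501):
    """Build a POST request for TensorFlow Serving's REST predict endpoint."""
    url = f"http://{host}:{port}/v1/models/{model_name}:predict"
    body = json.dumps({"instances": instances}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Hypothetical model name and a single input row with 4 features.
request = build_predict_request("localhost", "churn_model", [[0.2, 0.5, 0.1, 0.9]])
print(request.full_url)
print(request.data.decode("utf-8"))

# To send it against a running server, something like:
# with urllib.request.urlopen(request) as response:
#     predictions = json.loads(response.read())["predictions"]
```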

Scaling and Managing Models

In order to ensure the successful deployment of your TensorFlow models in production, it is crucial to focus on scaling and managing your models effectively. Here are some key points to keep in mind:

- Versioning: Keep track of different versions of your models by applying proper versioning strategies. This allows you to roll out new updates without affecting the existing systems, and even roll back to previous versions if necessary.

- Monitoring: Continuously monitor the performance of your deployed models to detect any potential issues or degradation in performance. TensorBoard is helpful for visualising training and evaluation metrics. For live traffic, also track serving metrics such as latency, error rate, and prediction quality.

- Load balancing: Distribute the incoming requests across multiple instances of your models to balance the load and ensure optimal performance. This can be achieved using tools like Kubernetes or through managed cloud solutions like Google Cloud Vertex AI or Amazon SageMaker.

- Auto-scaling: Implement auto-scaling strategies to scale the number of instances of your models based on the incoming traffic. This ensures that your deployments can handle increased workloads without manual interventions.
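One common versioning convention, and the one TensorFlow Serving reads, is a numbered subdirectory per model version. The helper below is a hypothetical sketch of picking the next version number. The model name and temporary directory are placeholders.

```python
import tempfile
from pathlib import Path


def next_version_dir(model_root):
    """Return the path for the next numeric version under model_root."""
    root = Path(model_root)
    root.mkdir(parents=True, exist_ok=True)
    existing = [
        int(p.name) for p in root.iterdir() if p.is_dir() and p.name.isdigit()
    ]
    return root / str(max(existing, default=0) + 1)


with tempfile.TemporaryDirectory() as tmp:
    model_root = Path(tmp) / "churn_model"  # hypothetical model name

    first = next_version_dir(model_root)
    first.mkdir()  # a trained model would be exported here,
                   # e.g. with tf.saved_model.save(model, str(first))

    second = next_version_dir(model_root)
    version_names = (first.name, second.name)

print(version_names)
```

Keeping older version directories in place is what makes a rollback cheap: the serving system only needs to be pointed back at the previous number.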

By following these best practices, you will be able to deploy and scale your TensorFlow models effectively in production, harnessing the power of ML to drive valuable insights and enhance decision-making for your business.

Best Practices and Considerations

Security and Privacy

When using TensorFlow for business purposes, it's crucial to consider security and privacy. To ensure your data remains protected, follow these guidelines:

- Store sensitive data in a secure environment, and limit access to only authorised personnel.

- Anonymise the data used for training your model by removing personally identifiable information.

- Keep your TensorFlow libraries updated to ensure the latest security patches are applied.
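The anonymisation guideline can be sketched as follows. The field names and the secret key are hypothetical. Note that replacing an identifier with a keyed hash is pseudonymisation rather than full anonymisation, so whether it satisfies your legal obligations is a separate question.

```python
import hashlib
import hmac

# Hypothetical set of columns treated as personally identifiable.
PII_FIELDS = {"name", "email", "phone"}


def anonymise_record(record, secret_key):
    """Drop direct identifiers and replace customer_id with a keyed hash."""
    cleaned = {
        key: value
        for key, value in record.items()
        if key not in PII_FIELDS and key != "customer_id"
    }
    digest = hmac.new(
        secret_key, str(record["customer_id"]).encode("utf-8"), hashlib.sha256
    ).hexdigest()
    # A stable pseudonym lets rows for the same customer still be joined.
    cleaned["customer_key"] = digest[:16]
    return cleaned


secret_key = b"replace-with-a-managed-secret"  # placeholder, not a real key
record = {
    "customer_id": 1042,
    "name": "Jane Example",
    "email": "jane@example.com",
    "phone": "555-0100",
    "orders_last_year": 7,
    "total_spend": 412.50,
}

training_row = anonymise_record(record, secret_key)
print(training_row)
```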

Performance Optimisation

To achieve optimal performance with TensorFlow in a business setting, pay attention to these aspects:

- Select the appropriate hardware: Utilise GPUs, TPUs, or distributed systems to accelerate training and inference times.

- Optimise TensorFlow code: Implement performance-enhancing techniques such as model pruning, quantisation, and mixed-precision training.

| Technique | Description |
| --- | --- |
| Model pruning | Remove insignificant weights to reduce the model size. |
| Quantisation | Reduce the precision of weights and activations. |
| Mixed-precision training | Use both lower- and higher-precision arithmetic. |

- Monitor performance: Regularly analyse your model's performance to identify bottlenecks and areas for improvement.
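To give a feel for what quantisation does to the numbers, here is a library-free sketch of 8-bit affine quantisation applied to a handful of hypothetical weights. It illustrates the arithmetic only. In a real project the TensorFlow Lite converter or the TensorFlow Model Optimization Toolkit would handle this for you.

```python
def quantise(values, num_bits=8):
    """Map floats to signed integers using a scale and a zero point."""
    qmin, qmax = -(2 ** (num_bits - 1)), 2 ** (num_bits - 1) - 1
    lo, hi = min(values), max(values)
    # Fall back to a scale of 1.0 if all values are identical.
    scale = (hi - lo) / (qmax - qmin) or 1.0
    zero_point = round(qmin - lo / scale)
    quantised = [
        max(qmin, min(qmax, round(v / scale) + zero_point)) for v in values
    ]
    return quantised, scale, zero_point


def dequantise(quantised, scale, zero_point):
    """Recover approximate float values from the quantised integers."""
    return [(q - zero_point) * scale for q in quantised]


# Hypothetical layer weights stored as 32-bit floats.
weights = [-1.0, -0.25, 0.0, 0.5, 1.0]

q_weights, scale, zero_point = quantise(weights)
restored = dequantise(q_weights, scale, zero_point)
max_error = max(abs(w - r) for w, r in zip(weights, restored))

print("int8 weights:", q_weights)
print("max reconstruction error:", max_error)
```

Each weight now fits in one byte instead of four, at the cost of a small rounding error bounded by the scale.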

Maintaining and Updating Models

Proper maintenance of your TensorFlow models is essential for ensuring ongoing value for your business.

1. Evaluate your model: Continuously measure the performance of your model using appropriate metrics.

2. Monitor drift: Keep an eye on changes in your model's input data to detect any drift, which may affect its accuracy over time.

3. Retrain and refine: Periodically retrain your model with new data to ensure it stays updated and captures recent trends.
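A very simple drift check compares the mean of a live feature against the training data, measured in training standard deviations. The sketch below is one possible approach. The function names, the threshold of 0.5, and the sample values are assumptions, and production systems often use richer tests such as the population stability index.

```python
import statistics


def mean_shift_score(reference, live):
    """Shift of the live mean, measured in reference standard deviations."""
    ref_mean = statistics.mean(reference)
    ref_stdev = statistics.pstdev(reference)
    live_mean = statistics.mean(live)
    if ref_stdev == 0:
        return 0.0 if live_mean == ref_mean else float("inf")
    return abs(live_mean - ref_mean) / ref_stdev


def has_drifted(reference, live, threshold=0.5):
    """Flag drift when the mean shift exceeds the chosen threshold."""
    return mean_shift_score(reference, live) > threshold


# Hypothetical feature: average basket value seen during training.
training_values = [48.0, 50.0, 52.0, 49.0, 51.0, 50.0]

# Two hypothetical batches of recent production inputs.
stable_batch = [49.0, 51.0, 50.0, 50.0]
shifted_batch = [58.0, 60.0, 62.0, 59.0]

print("stable batch drifted:", has_drifted(training_values, stable_batch))
print("shifted batch drifted:", has_drifted(training_values, shifted_batch))
```

A drift flag like this is a natural trigger for the retraining step: it signals that the data the model sees no longer resembles the data it learned from.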

By adhering to these best practices and considerations, you can effectively implement TensorFlow for your business and achieve desired outcomes.

Frequently Asked Questions

How can TensorFlow be applied to commercial ventures?

What are practical examples of TensorFlow applications in industry?

Where can I find tutorials to learn about implementing TensorFlow in my business?

Is TensorFlow suited for professional-grade machine learning projects?

Can TensorFlow be integrated with Python for enterprise-level solutions?

Where is the official TensorFlow documentation for in-depth learning?
