Natural language understanding (NLU) is a crucial aspect of artificial intelligence (AI) focused on enabling machines to comprehend and interpret human language. If you are new to the field, start with the fundamentals of NLU and how they underpin today's breakthroughs in conversational AI. This step-by-step guide covers building NLU models, working with the available tools, and overcoming the challenges associated with NLU.

In today's data-driven world, NLU plays a significant role in facilitating communication between humans and computers. By processing written text and transcribed speech, converting it into structured data, and recognising intents and entities, NLU systems allow machines to perform tasks and interact with users in a more human-like manner. This improves the user experience and creates opportunities for automation and efficiency across many industries.

As you progress through this guide, you will learn about prominent NLU tools, frameworks, and techniques that will help you better understand how to develop and implement natural language understanding models. Whether you're a beginner in AI or an experienced professional, this guide serves as a comprehensive resource to enhance your knowledge and expertise in NLU.

Understanding NLU: The Basics

What is Natural Language Understanding?

Natural Language Understanding (NLU) is a subfield of Natural Language Processing (NLP) that focuses on enabling computers to comprehend human language. The goal of NLU is to extract meaning, such as the user's intent and any named entities, from unstructured text or speech. By doing so, computers can interact with humans more effectively and efficiently. Common NLU techniques include tokenisation, which breaks text into individual words or phrases, and named entity recognition, which identifies specific entities such as people, places, or dates.

The importance of NLU in AI

NLU plays a crucial role in artificial intelligence (AI), as it enables computers to understand and process human language. This ability is significant in a range of applications, including:

1. Chatbots and virtual assistants: NLU allows them to respond accurately to user queries by understanding intent and context.

2. Sentiment analysis: NLU aids in determining the emotional tone of the text, enabling businesses to gauge customer feedback, product reviews, and social media content.

3. Information extraction: NLU helps in extracting specific information from large text-based datasets for data analysis and decision-making.

In summary, NLU is an essential component of AI that bridges the communication gap between humans and computers. It promises to revolutionise fields like customer service, research, and analytics by providing enhanced capabilities to understand and process natural language data.

Setting Up the Development Environment

In this section, we will guide you through setting up the development environment for building natural language understanding (NLU) models. It's essential to have the right tools and software installed to ensure a smooth and efficient workflow.

Required software and tools

To start with, let's take a look at some of the essential tools and software needed for NLU development:

1. Operating System: Windows, macOS, and Linux are the most common options. Choose an OS based on your personal preference and system compatibility.

2. Integrated Development Environment (IDE): IDEs and code editors facilitate code writing, debugging, and project management. Popular options include Visual Studio Code, PyCharm, and Sublime Text.

3. Python: Most NLU models are built with Python, a versatile language with extensive libraries and a large community of developers. Install a recent stable version that your chosen NLU libraries support, as some libraries lag behind the newest Python release.

4. NLU Libraries: Several open-source libraries simplify NLU model building, including Rasa (whose NLU component was originally released as Rasa NLU), spaCy, and NLTK.

Installation and configuration steps

With the required tools and software identified, follow these steps to set up your development environment:

1. Update the Operating System: Keep your chosen operating system up to date with the latest updates to avoid compatibility issues.

2. Install an IDE: Download and install your preferred IDE to streamline your coding, testing, and debugging processes.

3. Install Python: Download and install a supported Python version from Python's official website, python.org.

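4. Install NLU Libraries: Use Python's package manager, pip, to install the NLU libraries. For example, to install spaCy, open your terminal or command prompt and run pip install spacy. Rasa NLU is no longer distributed as a separate package; its functionality is now part of Rasa, which is installed with pip install rasa.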

5. Configure Settings: Configure your IDE for the NLU library you've chosen. This may include selecting the Python interpreter (for example, a virtual environment created for the project), configuring linters, and setting up environment variables.

6. Explore Tutorials and Documentation: Familiarise yourself with documentation and tutorials for your chosen NLU library to help jumpstart your project.
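Once everything is installed, a short script can confirm that your interpreter is recent enough and that the libraries can be imported. This is a minimal sketch: MIN_PYTHON is an assumed placeholder, so check your chosen library's documentation for the versions it actually supports, and the package names assume the import names spacy, nltk, and rasa.

```python
import importlib.util
import sys

# Assumed placeholder: replace with the minimum version your libraries require.
MIN_PYTHON = (3, 8)
LIBRARIES = ["spacy", "nltk", "rasa"]


def check_environment(libraries=LIBRARIES, min_python=MIN_PYTHON):
    """Report whether Python is recent enough and which libraries are importable."""
    report = {"python_ok": sys.version_info[:2] >= min_python}
    for name in libraries:
        # find_spec returns None when a top-level package is not installed.
        report[name] = importlib.util.find_spec(name) is not None
    return report


if __name__ == "__main__":
    for key, ok in check_environment().items():
        print(f"{key}: {ok}")
```

Any library reported as False can be installed with pip before you continue.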

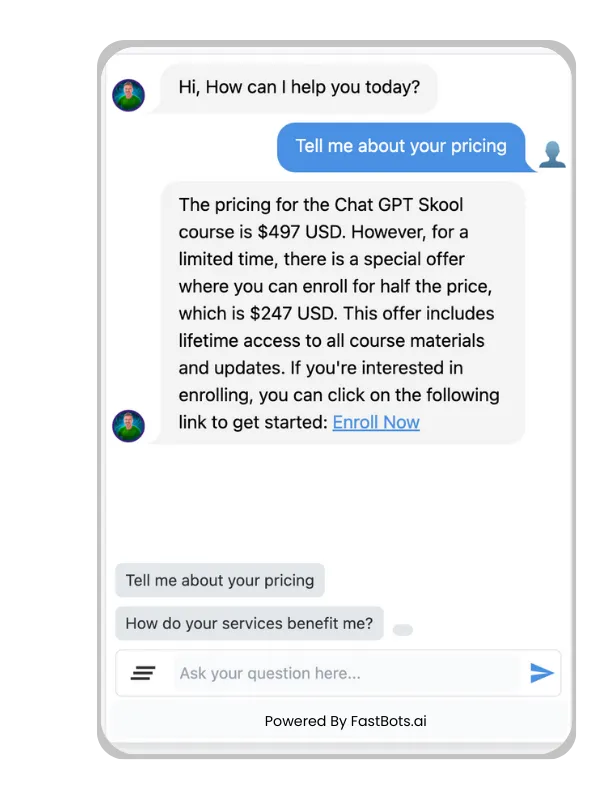

THE EASIEST WAY TO BUILD YOUR OWN AI CHATBOT

In less than 5 minutes, you could have an AI chatbot fully trained on your business data assisting your Website visitors.

Building blocks of NLU systems

In this section, we'll briefly discuss the essential components of Natural Language Understanding (NLU) systems.

Text Preprocessing and Tokenisation

The first step in building an NLU system is text preprocessing and tokenisation. In this stage, you'll clean and prepare the raw text data for further processing by:

- Removing unnecessary characters, such as punctuation marks and special symbols

- Converting all text to lowercase for consistency

- Tokenising the text, which involves breaking the text into individual words or tokens

A typical example of tokenisation is converting the sentence "The quick brown fox jumps over the lazy dog" into a list of words: ['the', 'quick', 'brown', 'fox', 'jumps', 'over', 'the', 'lazy', 'dog'].
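The three preprocessing steps above can be sketched in a few lines of standard-library Python. This is a deliberately naive version: it treats anything that is not a letter, digit, underscore, or whitespace as punctuation and splits on whitespace.

```python
import re


def preprocess(text):
    """Lowercase the text, strip punctuation and special symbols, then tokenise."""
    text = text.lower()
    # Remove every character that is not a word character or whitespace.
    text = re.sub(r"[^\w\s]", "", text)
    # Split on runs of whitespace to get the tokens.
    return text.split()


print(preprocess("The quick brown fox jumps over the lazy dog."))
# ['the', 'quick', 'brown', 'fox', 'jumps', 'over', 'the', 'lazy', 'dog']
```

Naive punctuation removal merges contractions ("don't" becomes "dont"); the tokenisers in NLTK (word_tokenize) and spaCy handle such cases more carefully.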

Word Embeddings and Vectorisation

The next step is word embeddings and vectorisation, in which you convert words into numerical representations, or vectors. One popular approach is to use pre-trained dense embeddings such as Word2Vec or GloVe. Alternatively, you can use sparse, count-based representations such as Bag of Words or TF-IDF (Term Frequency-Inverse Document Frequency).

Dense embeddings allow the NLU model to capture semantic relationships between words: words with similar meanings have vectors that lie close together (for example, with a high cosine similarity), which helps the model generalise to phrasings it has not seen before. Bag of Words and TF-IDF, by contrast, only record which words occur and how often.
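The sparse methods are simple enough to write out directly. The sketch below uses one common TF-IDF variant (term frequency divided by document length, idf = ln(N / df)); libraries such as scikit-learn's TfidfVectorizer use smoothed and normalised formulas by default, so their numbers will differ. Cosine similarity works the same way for dense embeddings, which you would load from a pre-trained model rather than compute like this.

```python
import math
from collections import Counter


def bag_of_words(tokens, vocabulary):
    """Count how often each vocabulary word occurs in the token list."""
    counts = Counter(tokens)
    return [counts[word] for word in vocabulary]


def tf_idf(docs):
    """Return (vocabulary, vectors) with tf = count / doc length and idf = ln(N / df)."""
    vocabulary = sorted({word for doc in docs for word in doc})
    n_docs = len(docs)
    # Document frequency: in how many documents each word appears at least once.
    doc_freq = Counter(word for doc in docs for word in set(doc))
    vectors = []
    for doc in docs:
        counts = Counter(doc)
        length = len(doc) or 1  # avoid dividing by zero for empty documents
        vectors.append(
            [counts[w] / length * math.log(n_docs / doc_freq[w]) for w in vocabulary]
        )
    return vocabulary, vectors


def cosine_similarity(u, v):
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


docs = [["book", "a", "flight"], ["book", "a", "hotel"], ["weather", "today"]]
vocab, vectors = tf_idf(docs)
print(vocab)
# ['a', 'book', 'flight', 'hotel', 'today', 'weather']
```

Because "flight" appears in only one document, it gets a higher weight in the first document than "book", which appears in two; a word present in every document would get a weight of zero.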

Intent recognition and entity extraction

The final building blocks of an NLU system are intent recognition and entity extraction. These stages turn the user's text into a structured representation: what the user wants, and the details needed to act on it.

- Intent recognition involves determining the underlying purpose or intention of the user's input. For instance, the intent of the sentence "I would like to book a flight" might be recognised as book_flight.

- Entity extraction refers to the identification and categorisation of essential data elements within the text. For a fuller request such as "I would like to book a flight from London to Paris on Friday", the entities could be origin (London), destination (Paris), and date (Friday).

Together, these components enable your NLU system to understand a user's input and trigger appropriate actions or responses.
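To make the structured output concrete, here is a minimal rule-based sketch that returns an intent and a dictionary of entities. The intent names, keyword lists, and the "from X to Y on Z" pattern are all hypothetical; real NLU systems learn these cues from annotated training data instead of hand-written rules.

```python
import re

# Hypothetical keyword lists; a trained model would learn these cues from data.
INTENT_KEYWORDS = {
    "book_flight": {"book", "flight", "fly"},
    "get_weather": {"weather", "rain", "sunny"},
}

# Hypothetical pattern: "from <City> to <City>", optionally followed by "on <Day>".
FLIGHT_PATTERN = re.compile(
    r"from (?P<origin>[A-Z][a-z]+) to (?P<destination>[A-Z][a-z]+)"
    r"(?: on (?P<date>[A-Z][a-z]+))?"
)


def parse(utterance):
    """Return the best-matching intent (or None) and any extracted entities."""
    tokens = set(re.findall(r"[a-z]+", utterance.lower()))
    # Intent recognition: score each intent by how many of its keywords appear.
    scores = {intent: len(tokens & kws) for intent, kws in INTENT_KEYWORDS.items()}
    best = max(scores, key=scores.get)
    intent = best if scores[best] > 0 else None
    # Entity extraction: pull named slots out of the original, cased text.
    entities = {}
    match = FLIGHT_PATTERN.search(utterance)
    if match:
        entities = {slot: value for slot, value in match.groupdict().items() if value}
    return {"intent": intent, "entities": entities}


print(parse("I would like to book a flight from London to Paris on Friday"))
# {'intent': 'book_flight', 'entities': {'origin': 'London', 'destination': 'Paris', 'date': 'Friday'}}
```

Rules like these break as soon as users phrase things differently (for example "Paris-bound on Friday"), which is why the rest of this guide focuses on training models.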

Designing an NLU Model

In this section, we will discuss how to design an NLU (Natural Language Understanding) model by choosing the right algorithm, preparing training and testing data, and evaluating model accuracy.

Choosing the Right Algorithm

Selecting the appropriate algorithm for your NLU model is essential for good performance. Options include Hidden Markov Models (HMMs), Support Vector Machines (SVMs), and deep learning architectures such as Long Short-Term Memory networks (LSTMs) and Transformers. Each has its own strengths and weaknesses, and the right choice depends on the requirements of your application. Here is a brief comparison:

Hidden Markov Models (HMM): These are suitable for simple sequence labelling tasks but may not perform well with complex language patterns.

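Support Vector Machines (SVM): SVMs are inherently binary classifiers, but they extend to multi-class problems such as intent classification through one-vs-rest or one-vs-one schemes. Linear SVMs over TF-IDF features are a fast, strong baseline for text classification, although they do not model word order or long-range context.

Deep Learning Algorithms: Models such as LSTMs and Transformers are powerful for text-understanding tasks because they can handle context and complex language features. However, they require significant computational resources and, when trained from scratch, large amounts of training data; fine-tuning a pre-trained model reduces the amount of labelled data needed.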
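To illustrate the one-vs-rest idea, here is a minimal sketch of a linear SVM intent classifier trained with stochastic subgradient descent on the L2-regularised hinge loss. The training sentences, intent names, and hyperparameters (epochs, lr, lam) are hypothetical, and the model has no intercept term to keep it short; in practice you would use a library implementation such as scikit-learn's LinearSVC.

```python
from collections import defaultdict

# Hypothetical toy data: a real project needs many more examples per intent.
TRAIN = [
    ("book a flight to paris", "book_flight"),
    ("i need a plane ticket", "book_flight"),
    ("reserve a seat on a flight", "book_flight"),
    ("what is the weather like", "get_weather"),
    ("will it rain tomorrow", "get_weather"),
    ("is it sunny outside", "get_weather"),
    ("hello there", "greet"),
    ("hi good morning", "greet"),
]


def features(text):
    """Binary bag-of-words: every distinct lowercase token gets the value 1.0."""
    return {token: 1.0 for token in text.lower().split()}


def dot(weights, x):
    """Sparse dot product between a weight dict and a feature dict."""
    return sum(weights.get(f, 0.0) * v for f, v in x.items())


def train_one_vs_rest(examples, epochs=20, lr=0.1, lam=0.01):
    """Train one linear SVM per intent by SGD on the regularised hinge loss.

    Each binary SVM treats its own intent as +1 and every other intent as -1.
    """
    labels = sorted({label for _, label in examples})
    data = [(features(text), label) for text, label in examples]
    models = {label: defaultdict(float) for label in labels}
    for _ in range(epochs):
        for x, gold in data:
            for label, w in models.items():
                y = 1.0 if gold == label else -1.0
                margin = y * dot(w, x)
                # Gradient step for the L2 regulariser: shrink every weight.
                for f in w:
                    w[f] *= 1.0 - lr * lam
                # The hinge-loss subgradient is non-zero only when the margin is below 1.
                if margin < 1.0:
                    for f, v in x.items():
                        w[f] += lr * y * v
    return models


def predict(models, text):
    """Return the intent whose SVM gives the highest score."""
    x = features(text)
    return max(models, key=lambda label: dot(models[label], x))


models = train_one_vs_rest(TRAIN)
print(predict(models, "will it rain"))  # prints get_weather
```

One-vs-rest keeps each SVM a simple binary problem; the price is that the per-intent scores are not calibrated probabilities, only values to compare.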

Training and testing data

Properly preparing training and testing data is crucial for building an effective NLU model. Follow these steps to prepare the data:

1. Data Collection: Gather a variety of text data relevant to the domain and application of your NLU model. This data will form the basis of your training dataset.

2. Data Preprocessing: Clean and preprocess the collected text data by removing irrelevant content, tokenising the text, and converting it to lower case. Remove stop words only if it helps your model: words such as "not" or "no" can reverse the meaning of a request.

3. Annotation: Annotate the preprocessed data with appropriate intents and entities, which represent the user’s intended actions and relevant information, respectively. This will form your labelled training dataset.

4. Data Splitting: Divide your labelled dataset into training and testing sets, typically with a 70/30 or 80/20 split. Use a stratified split so that both sets have a similar distribution of intents, and check that every entity type appears in both.
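A stratified split can be sketched by shuffling and splitting each intent's examples separately. This assumes the data is a list of (text, intent) pairs; scikit-learn offers the same behaviour through the stratify parameter of train_test_split.

```python
import random
from collections import defaultdict


def stratified_split(examples, test_fraction=0.2, seed=42):
    """Split (text, intent) pairs so each intent keeps roughly the same train/test ratio."""
    by_intent = defaultdict(list)
    for text, intent in examples:
        by_intent[intent].append((text, intent))
    rng = random.Random(seed)  # fixed seed so the split is reproducible
    train, test = [], []
    for items in by_intent.values():
        rng.shuffle(items)
        # Keep at least one test example for every intent that has two or more examples.
        n_test = max(1, round(len(items) * test_fraction)) if len(items) > 1 else 0
        test.extend(items[:n_test])
        train.extend(items[n_test:])
    return train, test
```

With 10 book_flight and 5 get_weather examples and test_fraction=0.2, the test set receives 2 and 1 examples respectively, preserving the 2:1 ratio.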

Evaluating Model Accuracy

Assessing the performance of your NLU model is crucial to ensuring its effectiveness in understanding users' intents and extracting entities. Some commonly used evaluation metrics are:

Precision: the proportion of predicted positives that are correct, TP / (TP + FP), where TP and FP are true and false positives.

Recall: the proportion of actual positives that the model finds, TP / (TP + FN), where FN is false negatives.

F1-score: the harmonic mean of precision and recall, 2 × precision × recall / (precision + recall). It balances the two metrics and is especially valuable with imbalanced datasets, where accuracy alone can be misleading.
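For intent classification, these metrics are computed per intent (treating that intent as the positive class) and then averaged. The sketch below uses macro averaging, an unweighted mean over intents; the labels are hypothetical. scikit-learn's classification_report and f1_score(average="macro") provide the same figures.

```python
def per_intent_metrics(y_true, y_pred, intent):
    """Precision, recall and F1 for one intent, treating it as the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == intent and p == intent)
    fp = sum(1 for t, p in pairs if t != intent and p == intent)
    fn = sum(1 for t, p in pairs if t == intent and p != intent)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def macro_f1(y_true, y_pred):
    """Unweighted mean of per-intent F1, so rare intents count as much as common ones."""
    intents = sorted(set(y_true) | set(y_pred))
    return sum(per_intent_metrics(y_true, y_pred, i)[2] for i in intents) / len(intents)


y_true = ["book_flight", "book_flight", "get_weather", "get_weather"]
y_pred = ["book_flight", "get_weather", "get_weather", "get_weather"]
print(per_intent_metrics(y_true, y_pred, "book_flight"))
print(macro_f1(y_true, y_pred))
```

Here book_flight has precision 1.0 (its only prediction is correct) but recall 0.5 (it found one of two), while get_weather has precision 2/3 and recall 1.0.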

Remember, continuous improvement and fine-tuning of your NLU model are vital for optimal performance. Regularly evaluate your model, update your training data, and iterate as necessary.

Advancing Your NLU Skills

As you become more comfortable with the basics of natural language understanding (NLU), it's time to explore advanced techniques and stay updated with industry trends. This will help you expand your NLU knowledge and sharpen your skills.

Advanced Techniques and Optimisations

To dive deeper into NLU, explore the following advanced techniques:

- Transfer Learning: Utilise pre-trained models and fine-tune them for your specific needs, saving you time and resources.

- Active Learning: Let the model choose which unlabelled examples humans should annotate next, typically those it is least confident about, so that each annotation improves accuracy as much as possible.

- Data Augmentation: Increase the diversity of your training dataset by applying transformations such as synonym replacement and back-translation, which helps make your model more robust to varied phrasing.
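Two of these techniques are easy to sketch. Synonym replacement below uses a hypothetical, hand-written synonym table (real projects often use a thesaurus or a paraphrasing model), and the active-learning helper implements least-confidence sampling, assuming your model can output a list of intent probabilities for each unlabelled example.

```python
# Hypothetical synonym table for illustration only.
SYNONYMS = {
    "book": ["reserve"],
    "flight": ["plane ticket"],
    "want": ["would like"],
}


def synonym_variants(utterance, synonyms=SYNONYMS):
    """Data augmentation: create one new utterance per (word, synonym) replacement."""
    words = utterance.split()
    variants = []
    for i, word in enumerate(words):
        for alternative in synonyms.get(word, []):
            variants.append(" ".join(words[:i] + [alternative] + words[i + 1:]))
    return variants


def select_for_annotation(pool_probabilities, k):
    """Active learning by least-confidence sampling.

    pool_probabilities holds one list of predicted intent probabilities per
    unlabelled example; return the indices of the k examples whose highest
    probability is lowest, i.e. where the model is least sure.
    """
    confidence = [max(probs) for probs in pool_probabilities]
    return sorted(range(len(confidence)), key=lambda i: confidence[i])[:k]


print(synonym_variants("i want to book a flight"))
# ['i would like to book a flight', 'i want to reserve a flight', 'i want to book a plane ticket']
```

Augmented utterances inherit the original's intent label, so review them before training: a careless synonym can silently change the meaning.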

Be sure to keep the following best practices in mind as you employ these advanced techniques:

1. Continuously test and refine your models to ensure optimal performance.

2. Regularly update your training data, and focus on providing quality data to improve your model's understanding.

3. Aim for a balance between specificity and generalisation in your intents and entities: avoid intents so narrow that they overlap with one another, or so broad that they lump unrelated requests together.

Staying up-to-date with industry trends

To stay current in the rapidly evolving field of NLU, consider the following:

- Follow influencers and researchers: Keep an eye on the work of AI and NLU researchers to learn about the latest advancements and methodologies.

- Read Industry Blogs: Regularly read blogs from reputable sources, such as BotPenguin, Voiceflow, and Mindbreeze, for insights, tips, and guides on NLU technologies.

- Participate in Conferences and Webinars: Attend industry conferences and webinars to learn from experts and network with other professionals.

- Join Online Forums and Communities: Engage with others in AI and NLU-focused forums and communities to learn from peers, share knowledge, and discuss trending topics.

By exploring advanced techniques, optimising your NLU models, and staying updated on industry trends, you'll further develop your expertise in natural language understanding.

Frequently Asked Questions

What are the fundamental principles behind natural language understanding?

How can one accurately identify intents and entities in textual data?

What are the initial steps one should take when starting with natural language understanding?

Could you outline the process of training a model for natural language understanding?

1. Gather a diverse dataset consisting of text data with labelled intents and entities.

2. Preprocess your data, which may involve tokenisation, stemming, or lemmatisation.

3. Split your data into training, validation, and testing sets to evaluate the model's performance.

4. Choose an appropriate machine learning algorithm, such as decision trees or neural networks.

5. Train your model on the training set, tuning hyperparameters against the validation set so that the test set stays unseen until the final evaluation.

6. Evaluate your model's performance on the testing set.

What tools and resources are essential for beginners in the field of natural language understanding?

How does one evaluate the effectiveness of a natural language understanding system?
