Transformer models have taken the world of machine learning by storm, providing a powerful and flexible architecture that has revolutionised the field of natural language processing (NLP) and beyond. To grasp how they work, it is essential to understand their core ideas and components.

At the heart of the Transformer model is the concept of "attention," which allows the model to "focus" on different parts of the input data and weigh how relevant each part is to the others. Because self-attention relates all positions in a sequence to one another without the step-by-step recurrence used by earlier sequence models, the model can process the input in parallel, greatly improving computational efficiency during training.

The original Transformer is composed of two primary components: the encoder and the decoder. The encoder processes the input data, while the decoder generates the output. By understanding each component's function and the role of attention in the model, you will be able to appreciate the power and versatility of Transformer models in tackling a wide range of tasks in the machine learning domain.

Fundamentals of Transformer Models

Key Concepts and Terminology

Transformer models are a type of neural network that primarily uses attention mechanisms to understand and generate context from sequential data, such as text or time series data. Let's dive into some fundamental concepts:

- Tokens are individual units of your input data. In the case of text, tokens can be characters, words, or even subwords.

- Self-attention is the mechanism through which the model can weigh and focus on different parts of the input data, helping it capture the dependencies between tokens.

- Encoder: A part of the transformer that processes the input data and extracts contextual information.

- Decoder: A part of the transformer that generates the output based on the contextual information provided by the encoder.

Architecture Overview

Here's a brief overview of the Transformer model's architecture:

1. Input: Your input data is first tokenized and passed through an embedding layer to create a continuous representation of the tokens.

2. Positional Encoding: Positional information is added to the embeddings to retain the input sequence's order.

3. Encoder Layers: The input embeddings pass through multiple identical encoder layers, each consisting of two sub-layers: multi-head attention and feedforward neural networks (FFNN).

- Multi-head Attention: This sub-layer allows the model to learn different attention patterns to capture dependencies and relationships between tokens in the input sequence.

- Feedforward Neural Network: A small position-wise FFNN, applied to each token's representation independently, that passes the output of the attention mechanism through a hidden layer with a non-linear activation to refine the encoding.

4. Decoder Layers: Contextual information from the encoder is used by several identical decoder layers to generate the output. Each decoder layer first applies masked multi-head self-attention to the previously generated output, so that a position cannot attend to later positions, then a second multi-head attention sub-layer that attends to the encoder's output, and finally the FFNN.

5. Output: The final output from the decoder is passed through a linear layer and softmax function to generate probabilities identifying the most likely output tokens.
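Step 2 above can be illustrated with a short sketch. The article does not say which positional scheme is meant, so this example assumes the fixed sinusoidal encoding from the original Transformer paper (learned position embeddings are a common alternative). The function names and the toy embeddings are hypothetical.

```python
import math

def sinusoidal_positional_encoding(seq_len, d_model):
    """Return a seq_len x d_model list of sinusoidal position encodings."""
    encoding = []
    for pos in range(seq_len):
        row = []
        for i in range(d_model):
            # Each pair of dimensions (2k, 2k+1) shares one frequency.
            angle = pos / (10000 ** ((2 * (i // 2)) / d_model))
            # Even dimensions use sine, odd dimensions use cosine.
            row.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
        encoding.append(row)
    return encoding

def add_positions(embeddings):
    """Add the position encoding to each token embedding, element-wise."""
    seq_len, d_model = len(embeddings), len(embeddings[0])
    pe = sinusoidal_positional_encoding(seq_len, d_model)
    return [[e + p for e, p in zip(emb_row, pe_row)]
            for emb_row, pe_row in zip(embeddings, pe)]

# Hypothetical 3-token sequence with 4-dimensional embeddings.
embeddings = [[0.1, 0.2, 0.3, 0.4],
              [0.5, 0.6, 0.7, 0.8],
              [0.9, 1.0, 1.1, 1.2]]
encoded = add_positions(embeddings)
```

Because the encoding depends only on the position, two identical tokens at different positions end up with different input vectors, which is how the order of the sequence is retained.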

By combining these key components, transformer models can efficiently process and generate context-rich information from sequential data, making them powerful tools in natural language processing, translation, and generation tasks.
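The masked attention used in the decoder layers can be sketched as follows. This is a minimal illustration, assuming the usual causal (lower-triangular) mask in which a position may attend only to itself and earlier positions; the helper names are hypothetical.

```python
import math

def causal_mask(seq_len):
    """mask[i][j] is True when position i may attend to position j."""
    return [[j <= i for j in range(seq_len)] for i in range(seq_len)]

def apply_mask(scores, mask):
    """Replace disallowed scores with -inf so softmax gives them zero weight."""
    return [[s if allowed else float("-inf") for s, allowed in zip(row, mask_row)]
            for row, mask_row in zip(scores, mask)]

def softmax(row):
    """Numerically stable softmax over one row of scores."""
    highest = max(row)
    exps = [math.exp(x - highest) for x in row]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical raw attention scores for a 3-token output sequence.
scores = [[0.0, 0.0, 0.0],
          [0.0, 0.0, 0.0],
          [0.0, 0.0, 0.0]]
masked = apply_mask(scores, causal_mask(3))
weights = [softmax(row) for row in masked]
```

With equal raw scores, the first position can only attend to itself, the second splits its attention over two positions, and the third over all three.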

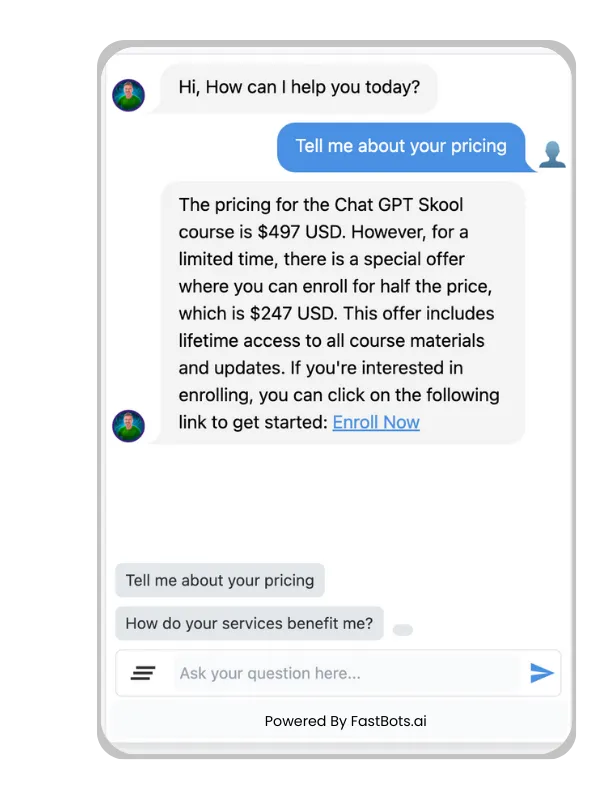


Mechanics of Attention

In this section, we will discuss the core concepts behind the attention mechanism in Transformer models, focusing on two key components: the self-attention mechanism and scaled dot-product attention.

Self-Attention Mechanism

The self-attention mechanism is a key innovation in transformer models that allows them to process input sequences more efficiently. It enables the model to focus on different parts of the input sequence, depending on the context.

To achieve this, the mechanism computes queries, keys, and values for each input token. Each of these components has a specific role:

- Queries represent what the current token is looking for, and are used to identify which input tokens are relevant for the current context.

- Keys represent what each token offers for matching; comparing a query with a key gives that token's importance in the context.

- Values carry the content of each input token that is passed on to the output.

The mechanism calculates attention scores by comparing the queries with the keys. These scores are then used to weight the values, resulting in an output representation that emphasises the most important input tokens for the current context.
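As a rough sketch of how queries, keys, and values are obtained, the example below assumes they are linear projections of the token embeddings, as in the standard Transformer. The weight matrices here are hypothetical hand-picked numbers; in a real model they are learned during training.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

# Hypothetical input: 2 tokens, each a 3-dimensional embedding.
x = [[1.0, 0.0, 2.0],
     [0.0, 1.0, 1.0]]

# Hypothetical projection weights mapping 3 dimensions down to 2.
w_q = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
w_k = [[0.0, 1.0], [1.0, 0.0], [1.0, 0.0]]
w_v = [[1.0, 1.0], [0.0, 2.0], [1.0, 0.0]]

queries = matmul(x, w_q)  # one query vector per token
keys = matmul(x, w_k)     # one key vector per token
values = matmul(x, w_v)   # one value vector per token

# Raw attention scores: each query compared with every key via a dot product.
scores = [[sum(q_i * k_i for q_i, k_i in zip(q, k)) for k in keys] for q in queries]
```

Each row of `scores` belongs to one token and holds one score per token in the sequence; these raw scores still need scaling and normalising before they are used to weight the values.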

Scaled Dot-Product Attention

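Scaled Dot-Product Attention is the mathematical formulation of attention used in the Transformer. It scores the relationship between every pair of tokens with a dot product, which can be computed with highly optimised matrix multiplication and is therefore faster and more memory-efficient in practice than alternatives such as additive attention.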

The process involves the following steps:

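1. Compute the dot product between the query matrix and the transpose of the key matrix.

2. Divide the result by the square root of the key dimension to scale down the dot products, which keeps the softmax in the next step from saturating when the dimension is large.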

3. Apply a softmax function to normalise the attention scores.

4. Multiply the normalised scores with the value matrix to obtain the output.
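The four steps above can be sketched in plain Python. This is a minimal, unbatched, single-head illustration using lists rather than a tensor library; the function names and the toy matrices are hypothetical.

```python
import math

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def transpose(m):
    return [list(col) for col in zip(*m)]

def softmax(row):
    """Numerically stable softmax over one row of scores."""
    highest = max(row)
    exps = [math.exp(x - highest) for x in row]
    total = sum(exps)
    return [e / total for e in exps]

def scaled_dot_product_attention(q, k, v):
    d_k = len(k[0])
    scores = matmul(q, transpose(k))                                # step 1
    scaled = [[s / math.sqrt(d_k) for s in row] for row in scores]  # step 2
    weights = [softmax(row) for row in scaled]                      # step 3
    output = matmul(weights, v)                                     # step 4
    return output, weights

# Hypothetical queries, keys, and values for a 2-token sequence.
q = [[1.0, 0.0], [0.0, 1.0]]
k = [[1.0, 0.0], [0.0, 1.0]]
v = [[1.0, 2.0], [3.0, 4.0]]
output, weights = scaled_dot_product_attention(q, k, v)
```

Each row of `weights` sums to 1, so every output row is a weighted average of the value vectors, with more weight on the tokens whose keys best match the query.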

This approach enables the Transformer model to efficiently attend to the most relevant input tokens, making the model more expressive and powerful in generating context-aware representations.

To summarise, the attention mechanism in Transformer models relies on the self-attention mechanism and scaled dot-product attention to efficiently process input sequences and focus on the most important tokens in the context. These innovations make transformers a powerful tool for a wide range of natural language processing tasks.

Transformer Model Training

Data Preparation

Before diving into training a Transformer model, you need to prepare your dataset. This process typically involves tokenising the text, representing words or subwords as numbers, and creating batches of training data. Consider three main steps:

1. Tokenisation: converting text into individual tokens (words, subwords, or characters).

2. Numericalisation: mapping tokens to their corresponding unique IDs.

3. Batching: dividing the dataset into smaller chunks to feed into the model during training.
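The three steps can be sketched with a toy pipeline. This example assumes simple whitespace tokenisation and a vocabulary built from the corpus itself; production systems typically use a trained subword tokeniser instead. The corpus, the special tokens `<pad>` and `<unk>`, and the function names are all hypothetical.

```python
def tokenise(text):
    """Step 1: split text into lowercase word tokens."""
    return text.lower().split()

def build_vocab(corpus):
    """Assign each distinct token a unique integer ID."""
    vocab = {"<pad>": 0, "<unk>": 1}  # reserved IDs for padding and unknown tokens
    for sentence in corpus:
        for token in tokenise(sentence):
            vocab.setdefault(token, len(vocab))
    return vocab

def numericalise(text, vocab):
    """Step 2: map tokens to IDs, falling back to <unk> for unseen tokens."""
    return [vocab.get(token, vocab["<unk>"]) for token in tokenise(text)]

def make_batches(sequences, batch_size):
    """Step 3: split the dataset into consecutive chunks of batch_size."""
    return [sequences[i:i + batch_size] for i in range(0, len(sequences), batch_size)]

corpus = ["the cat sat", "the dog barked loudly", "a cat barked"]
vocab = build_vocab(corpus)
ids = [numericalise(sentence, vocab) for sentence in corpus]
batches = make_batches(ids, batch_size=2)
```

Note that the sequences inside a batch can have different lengths at this point, which is why padding is usually applied before the batch is fed to the model.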

Additionally, sequences in a batch are usually padded to a common length, so it's essential to apply a padding mask to the input sequences, ensuring that the model doesn't attend to padding tokens or consider them when calculating loss and accuracy.
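A padding mask can be sketched as follows. The example assumes that ID 0 is reserved for padding and that a per-token loss has already been computed; `pad_batch`, `masked_mean`, and the loss values are hypothetical.

```python
PAD_ID = 0  # assumed to be reserved for padding and never used by a real token

def pad_batch(batch, pad_id=PAD_ID):
    """Pad every sequence to the longest length and build a matching mask."""
    max_len = max(len(seq) for seq in batch)
    padded = [seq + [pad_id] * (max_len - len(seq)) for seq in batch]
    # True marks a real token, False marks padding.
    mask = [[token != pad_id for token in seq] for seq in padded]
    return padded, mask

def masked_mean(per_token_losses, mask):
    """Average the loss over real tokens only, ignoring padded positions."""
    total = sum(loss
                for loss_row, mask_row in zip(per_token_losses, mask)
                for loss, keep in zip(loss_row, mask_row) if keep)
    count = sum(keep for mask_row in mask for keep in mask_row)
    return total / count

padded, mask = pad_batch([[2, 3, 4], [2, 5, 6, 7]])

# Hypothetical per-token losses; the value at the padded position is ignored.
losses = [[1.0, 1.0, 1.0, 100.0],
          [2.0, 2.0, 2.0, 2.0]]
mean_loss = masked_mean(losses, mask)
```

Without the mask, the large loss at the padded position would dominate the average even though it corresponds to no real token.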

The Training Process

The training of a Transformer model consists of iterating through the dataset and updating the model's weights based on the loss function, which measures the difference between the model's predictions and the actual target labels. Below are the primary components of the training process:

- Loss calculation: Compute the loss by comparing the model's output against the ground truth.

- Backpropagation: Compute the gradient of the loss with respect to each weight, then update the weights with gradient descent (or a variant such as Adam) to minimise the loss.
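The two components can be illustrated on a deliberately tiny model. This sketch assumes a single linear layer followed by softmax and a cross-entropy loss, so the gradient can be written out by hand; a real Transformer has many layers, and frameworks compute the gradients automatically. All names and numbers are hypothetical.

```python
import math

def softmax(logits):
    highest = max(logits)
    exps = [math.exp(x - highest) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def forward(weights, x):
    """logits[c] = sum over j of x[j] * weights[j][c]."""
    n_classes = len(weights[0])
    return [sum(x[j] * weights[j][c] for j in range(len(x))) for c in range(n_classes)]

def train_step(weights, x, target, lr):
    """One loss calculation followed by one gradient-descent update (in place)."""
    probs = softmax(forward(weights, x))
    loss = -math.log(probs[target])  # cross-entropy against the true class
    # For softmax with cross-entropy, the gradient w.r.t. the logits is
    # (probs - one_hot(target)).
    grad_logits = [p - (1.0 if c == target else 0.0) for c, p in enumerate(probs)]
    for j in range(len(x)):
        for c in range(len(grad_logits)):
            weights[j][c] -= lr * x[j] * grad_logits[c]
    return loss

# Hypothetical setup: 2 input features, 3 output classes, one training example.
weights = [[0.0] * 3 for _ in range(2)]
x, target = [1.0, 2.0], 2
losses = [train_step(weights, x, target, lr=0.1) for _ in range(5)]
```

The recorded losses shrink from one step to the next, which is the behaviour the training loop is designed to produce.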

During training, it's crucial to employ various techniques to improve the model's performance and prevent overfitting.

1. Learning rate scheduling: Adjust the learning rate over time, following a specified schedule.

2. Dropout: Randomly zero a subset of the model's activations during training, promoting generalisation.

3. Layer normalisation: Normalise each token's activations across the feature dimension, improving training speed and stability.
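Each of the three techniques can be sketched in a few lines. The article does not name a specific schedule, so this example assumes the warm-up schedule from the original Transformer paper; the dropout shown is the common "inverted" variant, and the layer normalisation omits the learnable gain and bias for brevity. Function names and default values are illustrative.

```python
import math
import random

def warmup_lr(step, d_model=512, warmup_steps=4000):
    """Learning rate that rises linearly during warm-up, then decays as 1/sqrt(step)."""
    step = max(step, 1)  # avoid division by zero at step 0
    return d_model ** -0.5 * min(step ** -0.5, step * warmup_steps ** -1.5)

def dropout(values, rate, rng):
    """Zero each value with probability `rate` and rescale the survivors."""
    keep = 1.0 - rate
    return [v / keep if rng.random() < keep else 0.0 for v in values]

def layer_norm(values, eps=1e-5):
    """Normalise one token's features to zero mean and (near) unit variance."""
    mean = sum(values) / len(values)
    variance = sum((v - mean) ** 2 for v in values) / len(values)
    return [(v - mean) / math.sqrt(variance + eps) for v in values]

rng = random.Random(0)  # seeded so the example is repeatable
dropped = dropout([1.0] * 8, rate=0.5, rng=rng)
normed = layer_norm([1.0, 2.0, 3.0, 4.0])
peak = warmup_lr(4000)
```

Dropout is applied only during training; at inference time the activations are passed through unchanged.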

By following these steps and incorporating the mentioned techniques, you'll be well on your way to training a high-performing Transformer model.

Applications of Transformers

In this section, you will learn about the various applications of transformer models in fields like natural language processing and beyond.

Natural Language Processing

Transformers have significantly impacted the field of natural language processing (NLP), offering state-of-the-art performance in several tasks. Below are some common NLP applications where transformers excel:

1. Machine Translation: Transformers have shown remarkable results in translating text from one language to another, surpassing traditional recurrent neural architectures like LSTM.

2. Text Summarisation: Given a lengthy document or article, transformer models can efficiently produce a concise summary while preserving the original's key points.

3. Sentiment Analysis: Identifying the sentiment or emotion behind a user's text (e.g., positive, negative, or neutral) is a crucial task for modern customer interaction systems, and transformers have achieved remarkable accuracy in this area.

4. Question Answering: Transformers enable virtual assistants and chatbots to provide accurate and contextually relevant answers to user queries.

Example: The GPT-3 model, powered by a transformer architecture, stands out for its impressive natural language understanding and generation capabilities.

Beyond Language Processing

While transformers were initially designed for NLP tasks, their versatility has led to successful applications in various other domains. Here are two notable examples:

1. Computer Vision: The Vision Transformer (ViT) is an approach that uses transformers for image recognition tasks. By breaking images into fixed-size patches, flattening them, and treating the resulting sequence like a sequence of tokens, ViT can relate distant regions of an image to one another. When pre-trained on large datasets, it can match or exceed the performance of traditional convolutional neural networks (CNNs).

2. Recommendation Systems: Transformers have been employed to build advanced recommendation systems by exploiting their ability to capture complex relationships between users and items, regardless of how far apart in a user's history the interactions occurred.
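For the computer vision example, the patching step can be sketched as below. This is a simplified illustration assuming a single-channel image stored as a list of rows; in an actual vision transformer each flattened patch is additionally passed through a learned linear projection, which is omitted here. The function name and the toy image are hypothetical.

```python
def image_to_patches(image, patch_size):
    """Split an H x W image into flattened patch_size x patch_size patches."""
    height, width = len(image), len(image[0])
    if height % patch_size or width % patch_size:
        raise ValueError("image dimensions must be divisible by patch_size")
    patches = []
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            # Read the patch row by row and flatten it into one vector.
            patch = [image[r][c]
                     for r in range(top, top + patch_size)
                     for c in range(left, left + patch_size)]
            patches.append(patch)
    return patches

# Hypothetical 4 x 4 grayscale image with pixel values 0..15.
image = [[r * 4 + c for c in range(4)] for r in range(4)]
patches = image_to_patches(image, patch_size=2)
```

The resulting list of four flattened patches plays the same role as a sequence of four token embeddings in a text model.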

In summary, transformers' flexibility and powerful attention mechanisms have enabled their use in various applications, leading to continuous advancements and innovations across multiple domains.

Complications and Restrictions

Computational Requirements

Transformer models, although highly effective, do come with their own set of challenges. One of the most significant issues is their computational requirements. These models often require vast amounts of memory and computational power, which can lead to long training times. For example:

- Memory requirements increase with the number of layers and attention heads, and the cost of self-attention grows quadratically with the sequence length.

- Training can be time-consuming, especially for large-scale datasets and models.
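As a rough illustration of how memory grows, the sketch below estimates the space needed just for the attention score matrices. It assumes a naive implementation that stores one full score matrix per head and per layer in 32-bit floats, and ignores weights, activations, and optimiser state; the 12-layer, 12-head configuration is a hypothetical example.

```python
def attention_score_bytes(seq_len, num_heads, num_layers,
                          batch_size=1, bytes_per_value=4):
    """Bytes needed to hold every seq_len x seq_len attention score matrix."""
    return (batch_size * num_layers * num_heads
            * seq_len * seq_len * bytes_per_value)

# Hypothetical model with 12 layers and 12 heads, at three sequence lengths.
mib = {n: attention_score_bytes(n, num_heads=12, num_layers=12) / 2 ** 20
       for n in (512, 1024, 2048)}
# mib == {512: 144.0, 1024: 576.0, 2048: 2304.0}
```

The estimate grows linearly with the number of layers and heads, but doubling the sequence length quadruples it, because every token attends to every other token.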

As a result, not all users may have access to the necessary resources to train and deploy large transformer models.

Interpretability Issues

Another challenge that comes with transformer models is the interpretability issue. While transformers excel in various natural language processing tasks, understanding how they arrive at certain outcomes or decisions can be difficult. Some specific concerns include:

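- Attention weights, although helpful for inspecting relationships between tokens, are spread across many heads and layers, which makes them complex to interpret, and they do not necessarily explain the model's final decision.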

- It can be challenging to identify the most critical features of the input data responsible for specific outcomes.

Despite these challenges, transformer models continue to revolutionise the deep learning and natural language processing domains. As you work with these models, always be mindful of their limitations and potential areas for improvement.

